3.5. Chapter 5: Linear Transformations#

Before Class:

Read Chapter 5 in Savov (2020) and take notes

Watch the following videos and take notes:

Review your notes on prior videos such as linear transformations and matrices and three-dimensional linear transformations

Compile a list of questions to bring to class

During and After Class:

Take notes (on paper or a tablet computer)

Complete this notebook, submit you answer via Gradescope

import numpy as np

import scipy.linalg as la

3.5.1. LU Factorization#

Addition Information:

LU factorization writes a matrix \(\mathbf{A}\) as the product of a lower triangular matrix (that is a matrix with nonzero elements on the diagonal and below) times an upper triangular matrix (that is a matrix with nonzero elements above the diagonal). In other words, \(\mathbf{A} = \mathbf{LU},\) where

and

where the \(*\) denote a potentially nonzero matrix element.

The question remains; how we find the entries in the matrices in the LU factorization? To see this, let’s look at a matrix we used in last class:

we want to find the matrix LU such that

Guess what! LU factorization is just like Gaussian elimination. \({\bf U}\) looks like our augmented matrix in row echelon form and \({\bf L}\) records the row operations. Read more here: LU Decomposition

3.5.1.1. LU Factorization in Python#

Let’s use Python to calculate the LU factorization:

# Define A

A = np.array([(3.0,2,1),(-1,4,5),(2,-8,10)])

# Perform LU decomposition

(P, L, U) = la.lu(A)

# Permutation matrix

print("P = \n",P)

# Lower diagonal matrix

print("L = \n",L)

# Upper diagonal matrix

print("U = \n",U)

# Verify result

print("P*L*U = \n",P.dot(L.dot(U)),"\n")

3.5.1.2. Permuation Matrix#

Wait, there is an extra matrix \({\bf P}\)?!?

\({\bf P}\) is the permuation matrix. For this specific example, \({\bf P}\) flips the second and third rows. This is partial pivoting.

Let’s look at some properties of \({\bf P}\).

print("inv(P) = \n",la.inv(P),"\n")

print("transpose(P) = \n",P.T,"\n")

print("P.T*P = \n",np.matmul(P.T,P),"\n")

\({\bf P}^T = {\bf P}^{-1}\) and \({\bf P}^T {\bf P} = {\bf I}\)

3.5.1.3. Solving an Linear System#

How does LU factorization help us solve a linear system? Recall,

Let’s combine these equations:

And move \({\bf P}\) from the left to the right.

Computing \({\bf P}^{T} {\bf b}\) is easy. It is just swappig columns in \({\bf b}\).

3.5.1.4. Foward Solve#

Next, let’s define \({\bf y} = {\bf U x}\). This gives:

Recall that \(\bf L\) is a lower triangular matrix. This means we can easily solve for \({\bf y}\) with forward substition.

# define b

b = np.array([6., 8, 4])

print("b =",b)

N = len(b)

# calculate P.T times b

Pb = P.T @ b

print("PT b =",Pb)

# Allocate y as an array of zeros

y = np.zeros(N)

# Loop over elements of y

for r in range(0,N):

print("\nBeginning to calculate y[",r,"]...")

# Copy value of PT b into right hand side (RHS)

RHS = Pb[r]

print("y",[r],"=",RHS)

# loop over some of the columns to evaluated summation

for c in range(0,r):

print("\t - ( y[",c,"]*L[",r,",",c,"] =",y[c],"*",L[r,c],"=",y[c]*L[r,c],")")

RHS -= y[c]*L[r,c]

# Store answer

y[r] = RHS

print("y",[r],"=",RHS)

print("\n\ny =", y)

Alternately, let’s solve with scipy:

y = la.solve(L, P.T@b)

print("y =", y)

3.5.1.5. Backsolve#

Next, we solve the linear system:

This is also easy to solve because \({\bf U}\) is upper triangular. This means we just need to do backward substitution.

# Define vector of zeros for x

x = np.zeros(N)

# Loop over elements of x backwards

for r in range(N-1,-1,-1):

print("\nBeginning to calculate x[",r,"]...")

# Copy value of y into right hand side (RHS)

RHS = y[r]

print("U[",r,",",r,"]=", U[r,r])

print("x",[r],"* U[",r,",",r,"]=",RHS)

# loop over some of the columns to evaluated summation

for c in range(r+1,N):

print("\t - ( x[",c,"]*U[",r,",",c,"] =",x[c],"*",U[r,c],"=",x[c]*U[r,c],")")

RHS -= x[c]*U[r,c]

# Store answer

x[r] = RHS/U[r,r]

print("x",[r],"=",RHS/U[r,r])

print("\n\nx =", x)

Alternatively, let’s solve with scipy:

x = la.solve(U, y)

print("x =", x)

3.5.1.6. Three Take Away Messages#

LU factorization is very similar to Gaussian elimination:

\(\mathbf{L}\) matrix saves the scaling factors from Gaussian elimination

\(\mathbf{U}\) matrix is the same as the reduced matrix

Partial pivoting works the same way for both LU factorization and Gaussian elimination

Solving a linear system with LU factorization is easy:

First, perform forward substitution, which exploits that \(\mathbf{L}\) is lower triangular

Second, perform backward substitution, which exploits that \(\mathbf{U}\) is upper triangular

LU factorization makes it easy to resolve \(\bf{A x} = {\bf b}\) for many different \(\bf{b}\) :

\(\mathbf{L}\) and \(\mathbf{U}\) save all of the steps from matrix reduction

Many nonlinear equation solving, numeric integration, and optimization algorithms exploit this trick

3.5.2. Invertible Matrix Theorem#

To paraphrase, \( A \) is a square \(n \times n\) matrix. The following statements are equivalent (all true or all false):

\(A\) is invertible

\(A\) is row equivalent to the \(n \times n\) identify matrix

\(A\) has \(n\) pivot positions

\(A x = 0\) has only the trivial solution

The columns of \(A\) form a linearly independent set

The system \(A x = b\) has at least one solution

Columns of \(A\) span \(\mathbb{R}^n\)

\(A^T\) is invertible

The rank of \(A\) is \(n\)

The determinant of \(A\) is not zero

3.5.2.1. Mass Balance Example#

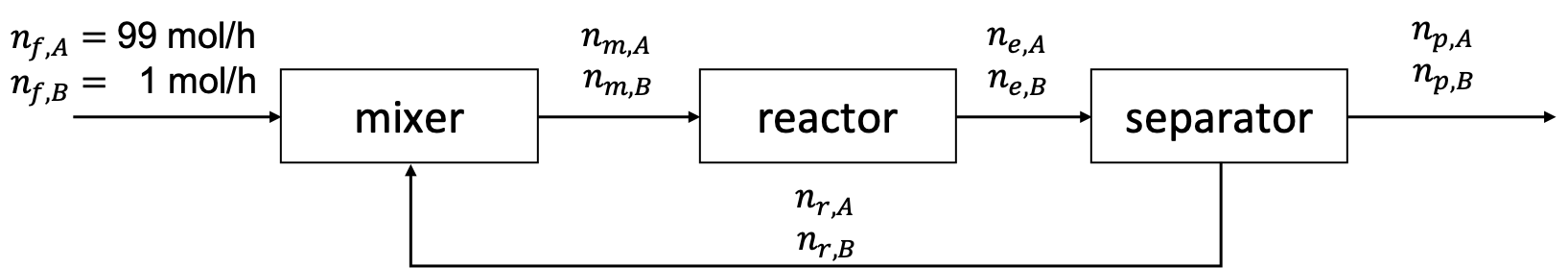

We want to convert A into valuable product B using a process with a mixer, reactor, and separator.

A feed enters at a rate of 100 moles per hour and is composed 99 mole % A and the remainder B. Unfortunately, the reactor only has a 10% per pass conversion efficiency. This means that only 10% of A that enters the reactor undergoes the reaction \(A \rightarrow B\). The separator splits the reactor effluent into two streams: the product and the recycle. The separator operates such that for every 1 mole of A in the product streams, there are 4 moles of A in the recycle stream. Likewise, for every 8 moles of B in the product stream, there is 1 mole of B in the recycle stream.

As shown in the figure above, we can model the process using component molar flowrates as variables. The first subscript is the stream (\(m\), \(e\), \(r\), or \(p\)) and the second subscript is the component (\(A\) or \(B\)).

3.5.2.2. Mathematical Model#

Your classmate proposes the following mathematical model

Equation A: \(n_{f,A} + n_{r,A} = n_{m,A}\)

Equation B: \(n_{f,B} + n_{r,B} = n_{m,B}\)

Equation C: \(0.9 ~ n_{m,A} = n_{e,A}\)

Equation D: \(n_{m,A} + n_{m,B} = n_{e,A} + n_{e,B}\)

Equation E: \(n_{e,A} = n_{p,A} + n_{r,A}\)

Equation F: \(n_{e,B} = n_{p,B} + n_{r,B}\)

Equation G: \(4 ~ n_{p,A} = n_{r,A}\)

Equation H: \(n_{p,B} = 8 ~ n_{r,B}\)

Multiple choice description options:

Entire Process: Overall mole balance

Entire Process: Component A mole balance

Process: Component B mole balance

Mixer: Overall mole balance

Mixer: Component A mole balance

Mixer: Component B mole balance

Mixer: Summation equation

Reactor: Overall mole balance

Reactor: Component A mole balance

Reactor: Component B mole balance

Reactor: 90% of A fed into reactor is converted to B

Reactor: 10% of A fed into reactor is converted to B

Separator: Overall mole balance

Separator: Component A mole balance

Separator: Component B mole balance

Separator: For every 4 moles of A in recycle, there are 1 moles of A in product

Seperator: For every 1 mole of A in recycle, there are 4 moles of A in product

Seperator: For every 8 moles of B in recycle, there are 1 moles of B in product

Seperator: For every 1 mole of B in recycle, there are 1 moles of B in product

None of the above

Hint: Choice 19 does not include a typo. It is meant to be 1 and 1.

What is the name for each equation?

# Add your solution here

Convert the above equations into a linear system in canonical form \({\bf A x} = {\bf b}\) on paper. This is excellent practice for the quiz. Finally, verify your answer matches the matrix coded below.

## Assemble A matrix

A_ex = np.zeros((8,8))

A_ex[0,0] = 1

A_ex[0,6] = -1

A_ex[1,1] = 1

A_ex[1,7] = -1

A_ex[2,0] = 0.9

A_ex[2,2] = -1

A_ex[3,0:2] = 1

A_ex[3,2:4] = -1

A_ex[4,2] = 1

A_ex[4,4] = -1

A_ex[4,6] = -1

A_ex[5,3] = 1

A_ex[5,5] = -1

A_ex[5,7] = -1

A_ex[6,4] = 4

A_ex[6,6] = -1

A_ex[7,5] = 1

A_ex[7,7] = -8

print("A=\n",A_ex)

## Assemble b vector

b_ex = np.zeros(8)

b_ex[0] = 99

b_ex[1] = 1

print("\nb=\n",b_ex)

3.5.2.3. Rank#

What is the rank of this system?

print("rank =",np.linalg.matrix_rank(A_ex))

In the space below, calculate a basis for the row space, column space, and null space. Hint: Review Chapter 4: Geometric Aspects of Linear Algebra

# Add your solution here

Use as many statements from the invertible matrix theorem as possible to predict the number of solutions to this linear system. Write your answer here as a bulletted list:

Fill in…

Fill in…

Finally, let’s solve this linear system with Python. First we will perform an LU factorization.

# Perform LU decomposition

(P, L, U) = la.lu(A_ex)

# Permutation matrix

print("P = \n",P)

# Lower diagonal matrix

print("L = \n",L)

# Upper diagonal matrix

print("U = \n",U)

# Verify result

print("P*L*U = \n",P.dot(L.dot(U)),"\n")

To LU factorization perform any pivoting?

Answer:

Now let’s solve the system:

x = la.solve(A_ex, b_ex)

def print_solution(x):

'''Print solution for this specific mole balance problem

Argument:

x: solution vector

Returns:

Nothing

'''

print("Stream description : Component = flowrate (mol/h)")

print("mixer outlet: A = ",x[0])

print("mixer effluent: B = ",x[1])

print("reactor effluent: A = ",x[2])

print("reactor effluent: B = ",x[3])

print("product: A = ",x[4])

print("product: B = ",x[5])

print("recycle: A = ",x[6])

print("recycle: B = ",x[7])

print_solution(x)

3.5.2.4. Flip order of rows#

Now let’s flip the order of rows in the linear system.

A_flip = np.flipud(A_ex.copy())

b_flip = np.flipud(b_ex.copy())

print("A=\n",A_flip)

print("\nb=\n",b_flip)

Let’s repeat the LU factorization.

# Perform LU decomposition

(P, L, U) = la.lu(A_flip)

# Permutation matrix

print("P = \n",P)

# Lower diagonal matrix

print("L = \n",L)

# Upper diagonal matrix

print("U = \n",U)

# Verify result

print("P*L*U = \n",P.dot(L.dot(U)),"\n")

Is the pivot order the same?

Now let’s solve the system of equation.

x_flip = la.solve(A_flip, b_flip)

print_solution(x_flip)

3.5.2.5. Is pivoting important?#

As homework, copy our reduced row echelon function (without pivoting) from Chapter 3: Computational linear algebra into the space below.

Next, use this function to solve the linear system of equations, both in its original form and with the rows flipped. (Do not consider the linear system in the next section with the overall mole balance.)

# Add your solution here

Explain what happened when you completed this excercise.

Is pivoting important for this specific example?

3.5.2.6. Replace the 8th equation with an overall mole balance#

A_new = A_ex.copy()

b_new = b_ex.copy()

A_new[7,:] = np.zeros(8)

A_new[7,4] = 1

A_new[7,5] = 1

b_new[7] = 100

print("A=\n",A_new)

print("\nb=\n",b_new)

What is the rank of this system?

print("rank =",np.linalg.matrix_rank(A_new))

As homework, calculate a basis for the row space, column space, and null space in the cell below.

# Add your solution here

Use as many statements from the invertible matrix theorem as possible to predict the number of solutions to this linear system. Write your answer here as a bulletted list:

Fill in…

Fill in…

Finally, let’s solve this sytem in Python.

x_new = la.solve(A_new, b_new)

print_solution(x_new)

Write a sentence to explain this result.

Answer:

3.5.3. Singular Value Decomposition#

Singular Value Decomposition (SVD) is an incredibly important tool in numeric analysis and engineering. SVD is at the core of image compression, singular processing, control and many other important applications. There is a chapter on SVD in your linear algebra textbook. I encourage you to read it.

For our purposes, we will think of SVD as another type of matrix factorization. We can represent a \(m \times n\) matrix as

where the columns of \(\mathbf{U}\) and \(\mathbf{V}\) are the left and right singular vectors:

Moreover, \(\mathbf{\Sigma}\) is a \(m \times n\) matrix of zeros with the singular values on the diagonal. For \(m > n\), we have:

Thus, we can rewrite the SVD factorization as follows (assuming \(m \geq n\)):

As homework, work out the dimensions \(\bf A\), \(\bf U\), \(\bf V\), and \(\bf {\Sigma}\) for \(m>n\) on paper using the above equaitons. What changes if \(n < m\)? Hint: Draw matrices.

Now let’s look at SVD in Python:

Asvd = np.array([(3.0,2,1),(-1,4,5),(2,-8,10),(-2,-8,10)])

print("A =\n",Asvd,"\n")

U,S,V = np.linalg.svd(Asvd)

print("U =\n",U,"\n")

print("S =\n",S,"\n")

print("V =\n",V,"\n")

Notice that Python only returns the first \(n\) elements of the diagonal \(\mathbf{\Sigma}\). Because \(m > n\), we know the remaining \(m-n = 1\) elements are zero.

Let’s check the rank of this matrix.

print("rank =",np.linalg.matrix_rank(A))

The matrix rank is 3 and \(n=3\), thus we say this matrix is full rank. Notice SVD returned 3 non-zero singular values.

Now let’s try SVD on a rank deficient matrix.

Asvd2 = np.array([[1, 1, 0],[1, -1, 2],[2, 0, 2]])

print("A = \n",Asvd2,"\n")

print("and has rank",np.linalg.matrix_rank(Asvd2))

U,S,V = np.linalg.svd(Asvd2)

print("U =\n",U,"\n")

print("S =\n",S,"\n")

print("V =\n",V,"\n")

Notice the almost zero third singular value. This matrix is numerically rank deficient. The number of non-zero singular values is the rank of a matrix.

3.5.4. Condition Number and Errors in Linear Systems#

The condition number is the ratio of the largest to smallest singular values of a matrix:

In some sense, the condition number \(\kappa\) measures how close a matrix is to singular.

The condition number also bounds the relative error in solving a linear system:

where \(\vec{\Delta b}\) is a perturbation (error) in the vector \(\vec{b}\) and \(\vec{\Delta x}\) is the corresponding or induced error in \(\vec{x}\). The operator \(|| \cdot ||\) is the \(\ell_2\) norm (i.e., length of a vector) you learned about in physics:

where \(a\) is an arbitrary \(N \times 1\) vector.

3.5.4.1. Simple Example#

Let’s revisit the motivating example from the beginning of class

# Declare matrix

A = np.matrix([(3.0,2,1),(-1,4,5),(2,-8,10)])

b = np.matrix([6,8,4])

print("A = \n",A)

print("\nb =",b)

## Calculate condition number

kappa = np.linalg.cond(A)

print("condition number =",kappa)

## Calculate the norm of a vector, such as b3

print("l2 norm of b =",np.linalg.norm(b))

Let’s say in this linear system \(\bf b\) comes from an experiment measurement with \(\pm 0.1\) absolute error for each element, thus \(\bf {\Delta b} = [0.1, 0.1, 0.1]^T\). What is the corresponding error when we solve the linear system for \(\bf x\). In other words, what is \(||\bf {\Delta x}||\)?

x = np.linalg.solve(A,np.transpose(b))

delta_b = np.array([0.1, 0.1, 0.1])

delta_b = np.transpose(delta_b)

delta_b_norm = np.linalg.norm(delta_b)

print(x)

print(np.linalg.norm(x))

print("Error in x (measured by || delta x ||) is less than or equal to ",kappa*delta_b_norm/np.linalg.norm(b) )

3.5.4.2. Mass Balance Example#

Consider the mass balance example (original version) from earlier in this notebook. What is the error in the solution of the mass balance model if \({\bf \Delta b} = (0.001) \bf{b}\), i.e., the measured flows in \(\bf{b}\) have 0.1% error?

# Add your solution here

3.5.4.3. Changing Units#

The condition number of the system with units mol/h is:

print("Condition number =",np.linalg.cond(A_ex))

What happens to the condition number if we convert the units from mol/h to mol/min?

print("Condition number =",np.linalg.cond(A_ex/60))

Explain why this happens by interpretting the SVD output below.

# Original units (mol/h)

U,S,V = np.linalg.svd(A_ex/60)

print("U =\n",U,"\n")

print("S =\n",S,"\n")

print("V =\n",V,"\n")

print("condition number=",S[0]/S[-1])

# Original units (mol/min)

U,S,V = np.linalg.svd(A_ex/60)

print("U =\n",U,"\n")

print("S =\n",S,"\n")

print("V =\n",V,"\n")

print("condition number=",S[0]/S[-1])

Answer:

Finally, let’s change the units for only the first equation and recalculate the SVD and condition number:

# Make a copy

A_ex_units = A_ex.copy()

# Convert units for row 0 (first equation) from mol/h to mol/s

A_ex_units[0,:] = A_ex_units[0,:] / 3600

U,S,V = np.linalg.svd(A_ex_units)

print("U =\n",U,"\n")

print("S =\n",S,"\n")

print("V =\n",V,"\n")

print("condition number=",S[0]/S[-1])

What happened? Was this a good idea? Write 1 or 2 sentences.

Answer:

3.5.4.4. Take Away Message#

Rank and condition number tell us sensitivity of solutions to a linear system:

Rank tells us how many linear independent equations are encoded in a matrix

Singular value decomposition does many things… we’ll use to tell if a matrix is near-singular, i.e., numerically rank deficient

Condition number tells us how \(\bf x\), the solution to a linear system, changes with uncertainty in \(\bf {b}\)