Algorithms Homework 3#

Assignment Overview: In this assignment, we will complete the function unconstrained_newton which implements four Hessian options (exact, SR1 approximation, BFGS approximation, steepest descent) and three globalization strategies (none, line search, trust region). We will then compare 10 algorithm variations on three test problems.

The best way to really learn the algorithms discussed in lecture is to implement them yourselves. Creating a function like unconstrained_newton from scratch takes a lot of time and comfort with Python. Instead, we will start with fairly complete version of unconstrained_newton. You will need to fill in the following details:

BFGS Hessian approximation

Backtracking line search with Armijo-Goldstein conditions

Trust region with Powell dogleg step

This assignment could take a long time, especially if you are still learning Python. Recognizing this, we will try a new grading policy for this assignment.

Instructions: To be eligible for full credit on the assignment, you need to:

Complete all requested pseudocode

Spend an honest 6 hours (4 hours for CBE 40499) total working on Python implementation.

Be sure to complete the Feature Status subsection.

The (hopefully) correct results for the test cases are available online in this notebook. Answer the questions using these results.

Pseudocode#

After reading through the entire assignment, please prepare pseudocode for the following functions/algorithms:

SR1 update (Python code is provided. Going from code to pseudocode is good practice.)

BFGS update

Line search

Trust region

Please turn in this pseudocode via Gradescope.

Reminder: pseudocode should not look like Python code copied to paper. Instead, pseudocode should clearly communicate the main steps of the algorithm with flow logic. Add lots of comments. It should not be programming language specific.

Unconstrained NLP Algorithm in Python#

Library of Helper Functions#

Below is a library of helpful functions. Most of these came from in class examples.

# Load required Python libraries.

import matplotlib.pyplot as plt

import numpy as np

from scipy import linalg

## Check is element of array is NaN

def check_nan(A):

return np.sum(np.isnan(A))

## Calculate gradient with central finite difference

def my_grad_approx(x,f,eps1,verbose=False):

'''

Calculate gradient of function my_f using central difference formula

Inputs:

x - point for which to evaluate gradient

f - function to consider

eps1 - perturbation size

Outputs:

grad - gradient (vector)

'''

n = len(x)

grad = np.zeros(n)

if(verbose):

print("***** my_grad_approx at x = ",x,"*****")

for i in range(0,n):

# Create vector of zeros except eps in position i

e = np.zeros(n)

e[i] = eps1

# Finite difference formula

my_f_plus = f(x + e)

my_f_minus = f(x - e)

# Diagnostics

if(verbose):

print("e[",i,"] = ",e)

print("f(x + e[",i,"]) = ",my_f_plus)

print("f(x - e[",i,"]) = ",my_f_minus)

grad[i] = (my_f_plus - my_f_minus)/(2*eps1)

if(verbose):

print("***** Done. ***** \n")

return grad

## Calculate gradient using central finite difference and my_hes_approx

def my_hes_approx(x,grad,eps2):

'''

Calculate Hessian of function my_f using central difference formula and my_grad

Inputs:

x - point for which to evaluate gradient

grad - function to calculate the gradient

eps2 - perturbation size (for Hessian NOT gradient approximation)

Outputs:

H - Hessian (matrix)

'''

n = len(x)

H = np.zeros([n,n])

for i in range(0,n):

# Create vector of zeros except eps in position i

e = np.zeros(n)

e[i] = eps2

# Evaluate gradient twice

grad_plus = grad(x + e)

grad_minus = grad(x - e)

# Notice we are building the Hessian by column (or row)

H[:,i] = (grad_plus - grad_minus)/(2*eps2)

return H

## Linear algebra calculation

def xxT(u):

'''

Calculates u*u.T to circumvent limitation with SciPy

Arguments:

u - numpy 1D array

Returns:

u*u.T

Assume u is a nx1 vector.

Recall: NumPy does not distinguish between row or column vectors

u.dot(u) returns a scalar. This functon returns an nxn matrix.

'''

n = len(u)

A = np.zeros([n,n])

for i in range(0,n):

for j in range(0,n):

A[i,j] = u[i]*u[j]

return A

## Analyze Hessian

def analyze_hes(B):

print(B,"\n")

l = linalg.eigvals(B)

print("Eigenvalues: ",l,"\n")

Main Function#

Below is the main function. You need to complete details in four functions.

def unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose=False,max_iter=50,

algorithm="newton",globalization="line-search", # specify algorithm

eps_dx=1E-6,eps_df=1E-6, # Convergence tolerances (all)

eta_ls=0.25,rho_ls=0.9,alpha_max=1.0, # line search parameters

delta_max_tr=10.0,delta_0_tr=2.0, # trust region parameters

kappa_1_tr = 0.25, kappa_2_tr = 0.75, gamma_tr=0.125 # trust region parameters

):

'''

Newton-Type Methods for Unconstrained Nonlinear Continuous Optimization

Arguments (required):

calc_f : function f(x) to minimize [scalar]

calc_grad: gradient of f(x) [vector]

calc_hes: Hessian of f(x) [matrix]

Arguments (options):

algorithm : specify main algorithm.

choices: "newton", "sr1", "bfgs", "steepest-descent"

globalization : specify globalization strategy

choices: "none", "line-search", "trust-region"

eps_dx : tolerance for step size norm (convergence), eps1 in notes

eps_df : tolerance for gradient norm (convergence), eps2 in notes

eta_ls : parameter for Goldstein-Armijo conditions (line search only)

rho_ls : parameter to shrink (backstep) line search

alpha_max : initial step length scaling for line search and/or steepest-descent

delta_max_tr : maximum trust region size

delta_0_tr : initial trust region size

kappa_1_tr : 'shrink' tolerance for trust region

kappa_2_tr : 'expand' tolerance for trust region

gamma_tr : 'accept step' tolerance for trust region

Returns:

x : iteration history for x (decision variables) [list of numpy arrays]

f : iteration history for f(x) (objective values) [list of numpy arrays]

p : iteration history for p (steps)

B : iteration history for Hessian approximations [list of numpy arrays]

'''

# Allocate outputs as empty lists

x = [] # decision variables

f = [] # objective values

p = [] # steps

grad = [] # gradients

alpha = [] # line search / steepest descent step scalar

B = [] # Hessian approximation

delta = [] # trust region size

rho = [] # trust region actual/prediction ratio

step_accepted = [] # step status for trust region. True means accepted. False means rejected.

# Note: alpha, delta and rho will remain empty lists unless used in the algorithm below

# Store starting point

x.append(x0)

k = 0

flag = True

# Print header for iteration information

print("Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(eig(B)) \talpha \t\tdelta \t\tstep")

while flag and k < max_iter:

# Evaluate f(x) at current iteration

f.append(calc_f(x[k]))

# Evaluate gradient

grad.append(calc_grad(x[k]))

if(check_nan(grad[k])):

print("WARNING: gradiant calculation returned NaN")

break

if verbose:

print("\n")

print("k = ",k)

print("x = ",x[k])

print("grad = ",grad[k])

print("f = ",f[k])

# Calculate exact Hessian or update approximation

if(algorithm == "newton"):

B.append(calc_hes(x[k]))

elif k == 0 or algorithm == "steepest-descent":

# Initialize or set to identity

B.append(np.eye(len(x0)))

elif algorithm == "sr1" or algorithm == "bfgs":

# Change in x

s = x[k] - x[k-1]

# Change in gradient

y = grad[k] - grad[k-1]

if verbose:

print("s = ",s)

print("y = ",y)

if algorithm == "sr1": # Calculate SR1 update

dB = sr1_update(s, y, k, B, verbose)

B.append(B[k-1] + dB)

else: # Calculate BFGS update

dB = bfgs_update(s, y, k, B, verbose)

B.append(B[k-1] + dB)

else:

print("WARNING. algorithm = ",algorithm," is not supported.")

break

if verbose:

print("B = \n",B[k])

print("B[k].shape = ",B[k].shape)

if(check_nan(B[k])):

print("WARNING: Hessian update returned NaN")

break

c = np.linalg.cond(B[k])

if c > 1E12:

flag = False

print("Warning: Hessian approximation is near singular.")

print("B[k] = \n",B[k])

else:

# Solve linear system to calculate step

pk = linalg.solve(B[k],-grad[k])

if globalization == "none":

if algorithm == "steepest-descent":

# Save step and scale by alpha_max

p.append(pk*alpha_max)

else:

# Take full step

p.append(pk)

# Apply step and calculate x[k+1]

x.append(x[k] + p[k])

elif globalization == "line-search":

# Line Search Function

update, alphak = line_search(x, f, grad, calc_f, pk, k, alpha_max, eta_ls, rho_ls, verbose)

# Now the line search is complete, apply final value of alphak

p.append(update)

# Save alpha

alpha.append(alphak)

# Apply step and calculate x[k+1]

x.append(x[k] + p[k])

elif globalization == "trust-region":

# Trust region function

update = trust_region(x, grad, B, delta, k, pk, delta_0_tr, verbose)

p.append(update)

### Trust region management

# Actual reduction

ared = f[k] - calc_f(x[k] + p[k])

# Predicted reduction

pred = -(grad[k].dot(p[k]) + 0.5*p[k].dot(B[k].dot(p[k])))

# Calculate rho

if ared == 0 and pred == 0:

# This occurs is the gradient is zero and Hessian is P.D.

rho.append(1E4)

else:

rho.append(ared/pred)

if(verbose):

print("f[k] = ",f[k])

print("p[k] = ",p[k])

print("f(x[k] + p[k]) = ",calc_f(x[k] + p[k]))

print("ared = ",ared)

print("pred = ",pred)

print("rho = ",rho[k])

## Check trust region shrink/expand logic

if rho[k] < kappa_1_tr:

# Shrink trust region

delta.append(kappa_1_tr*linalg.norm(p[k]))

elif rho[k] > kappa_2_tr and np.abs(linalg.norm(p[k]) - delta[k]) < 1E-6:

# Expand trust region

delta.append(np.min([2*delta[k], delta_max_tr]))

else:

# Keep trust region same size

delta.append(delta[k])

# Compute step

if rho[k] > gamma_tr:

# Take step

x.append(x[k] + p[k])

step_accepted.append(True)

else:

# Skip step

x.append(x[k])

step_accepted.append(False)

else:

print("Warning: globalization strategy not supported")

if verbose:

print("p = ",p[k])

# Calculate norms

norm_p = linalg.norm(p[k])

norm_g = linalg.norm(grad[k])

# Calculate eigenvalues of Hessian (for display only)

min_ev = np.min(np.real(linalg.eigvals(B[k])))

'''

print("f[k] = ",f[k])

print("norm_g = ",norm_g)

print("norm_g = ",norm_p)

print("min_ev = ",min_ev)

'''

print(k,' \t{0: 1.4e} \t{1:1.4e} \t{2:1.4e} \t{3: 1.4e}'.format(f[k],norm_g,norm_p,min_ev),end='')

# Python tip. The expression 'if my_list' is false if 'my_list' is empty

if not alpha:

# alpha is an empty list

print(' \t -------',end='')

else:

# otherwise print value of alpha

print(' \t{0: 1.2e}'.format(alpha[k]),end='')

if not delta:

# delta is an empty list

print(' \t -------',end='')

else:

# otherwise print value of alpha

print(' \t{0: 1.2e}'.format(delta[k]),end='')

if not step_accepted:

print(' \t -----')

else:

if step_accepted[k]:

print(' \t accept')

else:

print(' \t reject')

# Check convergence criteria.

flag = (norm_p > eps_dx) and (norm_g > eps_df)

# Update iteration counter

k = k + 1

if(k == max_iter and flag):

print("Reached maximum number of iterations.")

print("x* = ",x[-1])

return x,f,p,B

def sr1_update(s, y, k, B, verbose):

"""

Function that implements the sr1 optimization algorithm

Inputs:

s : Change in x

y : Change in gradient

k : Iteration number

B : Hessian approximation

verbose : toggles verbose output (True or False)

Outputs:

dB : Update to Hessian approximation

"""

# SR1 formulation

u = y - B[k-1].dot(s)

denom = (u).dot(s)

# Formula: dB = u * u.T / (u.T * s) if u is a column vector.

if abs(denom) <= 1E-10:

# Skip update

dB = 0

else:

# Calculate update

dB = xxT(u)/denom

if(verbose):

print("SR1 update denominator, (y-B[k-1]*s).T*s = ",denom)

print("SR1 update u = ",u)

print("SR1 update u.T*u/(u.T*s) = \n",dB)

return dB #return the update to the Hessian approximation

def bfgs_update(s, y, k, B, verbose):

"""

Function that implements the BFGS optimization algorithm

Inputs:

s : Change in x

y : Change in gradient

k : Iteration number

B : Hessian approximation

verbose : toggles verbose output (True or False)

Outputs:

dB : Update to Hessian approximation

"""

# Define constant used to check norm of the update

# See Eq. (3.19) in Biegler (2010)

C2 = 1E-4

# Calculate intermediate

sy = s.dot(y)

# Calculate Term 2 denominator

# s.T * Bk * s

d2 = s.dot(B[k-1].dot(s))

# Condition check

C2norm = C2*linalg.norm(s,2)**2

if sy <= C2norm or d2 <= 1E-8:

skip = True

else:

skip = False

# Add your solution here

return dB #return the update to the Hessian approximation

def line_search(x, f, grad, calc_f, pk, k, alpha_max, eta_ls, rho_ls, verbose):

"""

Function that implements the line search globalization strategy

Inputs:

x : decision variables

f : objective values

grad : gradients

calc_f : function f(x) to minimize [scalar]

pk : step

k - Iteration number

alpha_max : initial step length scaling for line search and/or steepest-descent

eta_ls : parameter for Goldstein-Armijo conditions (line search only)

rho_ls : parameter to shrink (backstep) line search

verbose : toggles verbose output (True or False)

Outputs:

update : update to p

alphak : update to alpha

"""

# Flag - continue line search?

ls = True

## Initialize alpha

alphak = alpha_max

if(verbose):

print("\t LINE SEARCH")

while ls:

# Calculate test point (if step accepted)

xtest = x[k] + alphak*pk

# Evaluate f(x) at test point. This is used for Armijo and Goldstein conditions

ftest = calc_f(xtest)

# Add your solution here

# Now the line search is complete, apply final value of alphak

update = alphak*pk

return update, alphak

def trust_region(x, grad, B, delta, k, pk, delta_0_tr, verbose):

"""

Function that implements the trust region globalization strategy

Inputs:

x : decision variables

grad : gradients

B : Hessian approximation

delta : trust region size

k : Iteration number

pk : step

delta_0_tr : initial trust region size

verbose : toggles verbose output (True or False)

Outputs:

update : update to p

"""

### Initialize trust region radius

if(k == 0):

delta.append(delta_0_tr)

grad_zero = (linalg.norm(grad[k]) < 1E-14)

### Powell dogleg step

# Calculate Cauchy step (pC)

denom = grad[k].dot(B[k].dot(grad[k]))

if verbose:

print("TR: Cauchy step. grad.T*B*grad = ",denom)

if denom > 0:

# Term in ( ) is a scalar

pC = -(grad[k].dot(grad[k])/denom)*grad[k]

elif grad_zero:

pC = np.zeros(len(x[k]))

else:

pC = - delta[k]/linalg.norm(grad[k])*grad[k]

# Use Newton step (calculate above)

pN = pk

# Determine step

if linalg.norm(pN) <= delta[k]:

# Take Newton step. pN is inside the trust region.

update = pN

# Add your solution here

return update

Feature Status#

For each feature, please indicate the status upon submission.

SR1#

Status: please choose from “implemented and tested”, “implemented but testing incomplete”, “implementation incomplete”, “did not attempt implementation”

Details: Please describe in a few sentences or bullet points the outstanding tasks.

BFGS#

Status: please choose from “implemented and tested”, “implemented but testing incomplete”, “implementation incomplete”, “did not attempt implementation”

Details: Please describe in a few sentences or bullet points the outstanding tasks.

Line Search#

Status: please choose from “implemented and tested”, “implemented but testing incomplete”, “implementation incomplete”, “did not attempt implementation”

Details: Please describe in a few sentences or bullet points the outstanding tasks.

Trust Region#

Status: please choose from “implemented and tested”, “implemented but testing incomplete”, “implementation incomplete”, “did not attempt implementation”

Details: Please describe in a few sentences or bullet points the outstanding tasks.

Benchmark Tests#

In the remainder of the assignment, we will compare using several variants of Newton-type methods in different test problems taken from in class examples. For each problem at a given starting point, we will consider ten algorithm cases:

Newton method with exact Hessian and no globalization strategy

Newton method with exact Hessian and line search

Newton method with exact Hessian and Powell dogleg trust region

Quasi-Newton method with SR1 Hessian approximation and no globalization strategy

Quasi-Newton method with SR1 Hessian approximation and line search

Quasi-Newton method with SR1 Hessian approximation and Powell dogleg trust region

Quasi-Newton method with BFGS Hessian approximation and no globalization strategy

Quasi-Newton method with BFGS Hessian approximation and line search

Quasi-Newton method with BFGS Hessian approximation and Powell dogleg trust region

Steepest descent with line search

We will then assess each candidate solution by the eigenvalues of the true Hessian. The norm of the gradient is already displayed.

All of this analysis has been automated in a function below.

def check_sln(x,calc_hes):

Hval = calc_hes(x)

l, v = linalg.eig(Hval)

print("Eigenvalues of Hessian at x* = ",l)

def benchmark(x0,calc_f,calc_grad,calc_hes,verbose,cases=range(0,10)):

'''

Test 10 algorithm cases for a single starting point

Arguments:

x0 - starting point

calc_f - function to evaluate objective

calc_grad - function to evaluate gradient

calc_hes - function to evaluate Hessian

verbose - toggles verbose output (True or False)

cases - list of cases to consider. Especially helpful for debugging.

For example, setting cases=[1, 2] runs only case 2 and 3 (recall, Python starting counting at 0)

'''

for i in cases:

if i == 0:

print("***Case 1: Newton method with exact Hessian and no globalization strategy***")

x1,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="newton",globalization="none")

check_sln(x1[-1],calc_hes)

print("\n")

if i == 1:

print("***Case 2: Newton method with exact Hessian and line search***")

x2,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="newton",globalization="line-search")

check_sln(x2[-1],calc_hes)

print("\n")

if i == 2:

print("***Case 3: Newton method with exact Hessian and trust region***")

x3,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="newton",globalization="trust-region")

check_sln(x3[-1],calc_hes)

print("\n")

if i == 3:

print("***Case 4: Quasi-Newton method with SR1 Hessian approximation and no globalization strategy***")

x4,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="sr1",globalization="none")

check_sln(x4[-1],calc_hes)

print("\n")

if i == 4:

print("***Case 5: Quasi-Newton method with SR1 Hessian approximation and line search***")

x5,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="sr1",globalization="line-search")

check_sln(x5[-1],calc_hes)

print("\n")

if i == 5:

print("***Case 6: Quasi-Newton method with SR1 Hessian approximation and trust region***")

x6,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="sr1",globalization="trust-region")

check_sln(x6[-1],calc_hes)

print("\n")

if i == 6:

print("***Case 7: Quasi-Newton method with BFGS Hessian approximation and no globalization strategy***")

x7,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="bfgs",globalization="none")

check_sln(x7[-1],calc_hes)

print("\n")

if i == 7:

print("***Case 8: Quasi-Newton method with BFGS Hessian approximation and line search***")

x8,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="bfgs",globalization="line-search")

check_sln(x8[-1],calc_hes)

print("\n")

if i == 8:

print("***Case 9: Quasi-Newton method with BFGS Hessian approximation and trust region***")

x9,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="bfgs",globalization="trust-region")

check_sln(x9[-1],calc_hes)

print("\n")

if i == 9:

print("***Case 10: Steepest Descent and line search***")

x10,f,p,B = unconstrained_newton(calc_f,calc_grad,calc_hes,x0,verbose,

algorithm="steepest-descent",globalization="line-search")

check_sln(x10[-1],calc_hes)

print("\n")

Quadratic Test Problem#

Start debugging your function with the following problem:

def my_f(x):

return x[0]**2 + (x[1]-1)**2

def my_grad(x):

return np.array([2*x[0], 2*(x[1] - 1) ])

def my_hes(x):

return 2*np.eye(2)

Benchmark Cases#

The following code below runs cases 1 through 10. You can specify only a subset using the cases keyword.

x0 = np.array([-3.0,2.0])

benchmark(x0,my_f,my_grad,my_hes,False)

***Case 1: Newton method with exact Hessian and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 3.1623e+00 2.0000e+00 ------- ------- -----

1 0.0000e+00 0.0000e+00 0.0000e+00 2.0000e+00 ------- ------- -----

x* = [0. 1.]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 2: Newton method with exact Hessian and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 3.1623e+00 2.0000e+00 1.00e+00 ------- -----

1 0.0000e+00 0.0000e+00 0.0000e+00 2.0000e+00 1.00e+00 ------- -----

x* = [0. 1.]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 3: Newton method with exact Hessian and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 2.0000e+00 2.0000e+00 ------- 2.00e+00 accept

1 1.3509e+00 2.3246e+00 1.1623e+00 2.0000e+00 ------- 4.00e+00 accept

2 0.0000e+00 0.0000e+00 0.0000e+00 2.0000e+00 ------- 4.00e+00 accept

x* = [0. 1.]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 4: Quasi-Newton method with SR1 Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 6.3246e+00 1.0000e+00 ------- ------- -----

1 1.0000e+01 6.3246e+00 3.1623e+00 1.0000e+00 ------- ------- -----

2 1.9722e-31 8.8818e-16 5.0634e-16 1.0000e+00 ------- ------- -----

x* = [4.4408921e-17 1.0000000e+00]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 5: Quasi-Newton method with SR1 Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 4.6106e+00 1.0000e+00 7.29e-01 ------- -----

1 2.0976e+00 2.8966e+00 1.4483e+00 1.0000e+00 1.00e+00 ------- -----

2 0.0000e+00 0.0000e+00 0.0000e+00 1.0000e+00 1.00e+00 ------- -----

x* = [0. 1.]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 6: Quasi-Newton method with SR1 Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 2.0000e+00 1.0000e+00 ------- 2.00e+00 accept

1 1.3509e+00 2.3246e+00 1.1623e+00 1.0000e+00 ------- 4.00e+00 accept

2 9.8608e-32 6.2804e-16 5.7902e-16 1.0000e+00 ------- 4.00e+00 accept

x* = [-8.8817842e-17 1.0000000e+00]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 7: Quasi-Newton method with BFGS Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 6.3246e+00 1.0000e+00 ------- ------- -----

1 1.0000e+01 6.3246e+00 3.1623e+00 1.0000e+00 ------- ------- -----

2 1.9722e-31 8.8818e-16 5.0634e-16 1.0000e+00 ------- ------- -----

x* = [4.4408921e-17 1.0000000e+00]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 8: Quasi-Newton method with BFGS Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 4.6106e+00 1.0000e+00 7.29e-01 ------- -----

1 2.0976e+00 2.8966e+00 1.4483e+00 1.0000e+00 1.00e+00 ------- -----

2 0.0000e+00 0.0000e+00 0.0000e+00 1.0000e+00 1.00e+00 ------- -----

x* = [0. 1.]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 9: Quasi-Newton method with BFGS Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 2.0000e+00 1.0000e+00 ------- 2.00e+00 accept

1 1.3509e+00 2.3246e+00 1.1623e+00 1.0000e+00 ------- 4.00e+00 accept

2 9.8608e-32 6.2804e-16 5.7902e-16 1.0000e+00 ------- 4.00e+00 accept

x* = [-8.8817842e-17 1.0000000e+00]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

***Case 10: Steepest Descent and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.0000e+01 6.3246e+00 4.6106e+00 1.0000e+00 7.29e-01 ------- -----

1 2.0976e+00 2.8966e+00 2.1117e+00 1.0000e+00 7.29e-01 ------- -----

2 4.4001e-01 1.3267e+00 9.6714e-01 1.0000e+00 7.29e-01 ------- -----

3 9.2298e-02 6.0761e-01 4.4295e-01 1.0000e+00 7.29e-01 ------- -----

4 1.9361e-02 2.7829e-01 2.0287e-01 1.0000e+00 7.29e-01 ------- -----

5 4.0612e-03 1.2746e-01 9.2915e-02 1.0000e+00 7.29e-01 ------- -----

6 8.5189e-04 5.8374e-02 4.2555e-02 1.0000e+00 7.29e-01 ------- -----

7 1.7870e-04 2.6736e-02 1.9490e-02 1.0000e+00 7.29e-01 ------- -----

8 3.7484e-05 1.2245e-02 8.9265e-03 1.0000e+00 7.29e-01 ------- -----

9 7.8628e-06 5.6081e-03 4.0883e-03 1.0000e+00 7.29e-01 ------- -----

10 1.6493e-06 2.5685e-03 1.8725e-03 1.0000e+00 7.29e-01 ------- -----

11 3.4597e-07 1.1764e-03 8.5759e-04 1.0000e+00 7.29e-01 ------- -----

12 7.2572e-08 5.3879e-04 3.9277e-04 1.0000e+00 7.29e-01 ------- -----

13 1.5223e-08 2.4676e-04 1.7989e-04 1.0000e+00 7.29e-01 ------- -----

14 3.1933e-09 1.1302e-04 8.2390e-05 1.0000e+00 7.29e-01 ------- -----

15 6.6983e-10 5.1762e-05 3.7735e-05 1.0000e+00 7.29e-01 ------- -----

16 1.4051e-10 2.3707e-05 1.7282e-05 1.0000e+00 7.29e-01 ------- -----

17 2.9473e-11 1.0858e-05 7.9154e-06 1.0000e+00 7.29e-01 ------- -----

18 6.1824e-12 4.9729e-06 3.6252e-06 1.0000e+00 7.29e-01 ------- -----

19 1.2968e-12 2.2776e-06 1.6604e-06 1.0000e+00 7.29e-01 ------- -----

20 2.7203e-13 1.0431e-06 7.6044e-07 1.0000e+00 7.29e-01 ------- -----

x* = [2.26618986e-07 9.99999924e-01]

Eigenvalues of Hessian at x* = [2.+0.j 2.+0.j]

Discussion and Analysis#

Classify the solutions.

Answer:

Should we expect the SR1, BFGS, and steepest descent (all with line search) to always have the same results for the first iteration? Explain in a few sentences.

Answer:

For this problem, should we always expect all of the cases to converge to the same solution (regardless of starting point)? Explain in a few sentences.

Answer:

One-Dimensional Example#

Consider a scalar function \(f(x): \mathbb{R} \rightarrow \mathbb{R}\). Recall

## Define f(x)

f_ = lambda x : 0.5*(x[0]-1)**4 + (x[0]+1)**3 - 10*x[0]**2 + 5*x[0]

## Define f'(x)

df_ = lambda x : (6 - 8*x[0] - 3*x[0]**2 + 2*x[0]**3)*np.ones(1)

## Define f''(x)

ddf_ = lambda x : (-8 - 6*x[0] + 6*x[0]**2)*np.ones((1,1))

Benchmark Cases with \(x_0 = -3\)#

x0 = np.ones(1)*(-3.0)

benchmark(x0,f_,df_,ddf_,False)

***Case 1: Newton method with exact Hessian and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 7.9688e-01 6.4000e+01 ------- ------- -----

1 -8.6609e+00 1.2323e+01 3.5884e-01 3.4341e+01 ------- ------- -----

2 -1.1113e+01 1.9961e+00 8.5033e-02 2.3474e+01 ------- ------- -----

3 -1.1201e+01 1.0047e-01 4.7561e-03 2.1125e+01 ------- ------- -----

4 -1.1201e+01 3.0641e-04 1.4594e-05 2.0996e+01 ------- ------- -----

5 -1.1201e+01 2.8809e-09 1.3721e-10 2.0996e+01 ------- ------- -----

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 2: Newton method with exact Hessian and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 7.9688e-01 6.4000e+01 1.00e+00 ------- -----

1 -8.6609e+00 1.2323e+01 3.5884e-01 3.4341e+01 1.00e+00 ------- -----

2 -1.1113e+01 1.9961e+00 8.5033e-02 2.3474e+01 1.00e+00 ------- -----

3 -1.1201e+01 1.0047e-01 4.7561e-03 2.1125e+01 1.00e+00 ------- -----

4 -1.1201e+01 3.0641e-04 1.4594e-05 2.0996e+01 1.00e+00 ------- -----

5 -1.1201e+01 2.8809e-09 1.3721e-10 2.0996e+01 1.00e+00 ------- -----

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 3: Newton method with exact Hessian and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 7.9688e-01 6.4000e+01 ------- 2.00e+00 accept

1 -8.6609e+00 1.2323e+01 3.5884e-01 3.4341e+01 ------- 2.00e+00 accept

2 -1.1113e+01 1.9961e+00 8.5033e-02 2.3474e+01 ------- 2.00e+00 accept

3 -1.1201e+01 1.0047e-01 4.7561e-03 2.1125e+01 ------- 2.00e+00 accept

4 -1.1201e+01 3.0641e-04 1.4594e-05 2.0996e+01 ------- 2.00e+00 accept

5 -1.1201e+01 2.8809e-09 1.3721e-10 2.0996e+01 ------- 2.00e+00 accept

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 4: Quasi-Newton method with SR1 Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 5.1000e+01 1.0000e+00 ------- ------- -----

1 2.5347e+06 2.1389e+05 5.0988e+01 4.1950e+03 ------- ------- -----

2 1.4385e+01 5.0225e+01 1.1970e-02 4.1960e+03 ------- ------- -----

3 1.3788e+01 4.9468e+01 7.8223e-01 6.3240e+01 ------- ------- -----

4 -8.7761e+00 1.1999e+01 2.5050e-01 4.7900e+01 ------- ------- -----

5 -1.0797e+01 4.4562e+00 1.4799e-01 3.0111e+01 ------- ------- -----

6 -1.1184e+01 8.7657e-01 3.6239e-02 2.4188e+01 ------- ------- -----

7 -1.1201e+01 9.3432e-02 4.3236e-03 2.1610e+01 ------- ------- -----

8 -1.1201e+01 2.3882e-03 1.1341e-04 2.1058e+01 ------- ------- -----

9 -1.1201e+01 6.8113e-06 3.2439e-07 2.0998e+01 ------- ------- -----

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 5: Quasi-Newton method with SR1 Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 1.7512e+00 1.0000e+00 3.43e-02 ------- -----

1 -9.0674e+00 7.4167e+00 2.2233e-01 3.3359e+01 1.00e+00 ------- -----

2 -1.0458e+01 4.9083e+00 3.9155e-01 1.1282e+01 9.00e-01 ------- -----

3 -1.1073e+01 2.4333e+00 1.2978e-01 1.8750e+01 1.00e+00 ------- -----

4 -1.1197e+01 4.4617e-01 2.0109e-02 2.2188e+01 1.00e+00 ------- -----

5 -1.1201e+01 3.0206e-02 1.4602e-03 2.0686e+01 1.00e+00 ------- -----

6 -1.1201e+01 4.2458e-04 2.0241e-05 2.0977e+01 1.00e+00 ------- -----

Line search failure. alpha = 0.43046721000000016

7 -1.1201e+01 3.9417e-07 8.0888e-09 2.0977e+01 4.30e-01 ------- -----

x* = [-1.75447803]

Eigenvalues of Hessian at x* = [20.99602714+0.j]

***Case 6: Quasi-Newton method with SR1 Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 2.0000e+00 1.0000e+00 ------- 2.00e+00 accept

1 -7.0000e+00 9.0000e+00 3.0000e-01 3.0000e+01 ------- 5.00e-01 accept

2 -9.4350e+00 6.9360e+00 5.0000e-01 6.8800e+00 ------- 5.00e-01 accept

3 -1.1179e+01 9.8400e-01 6.2121e-02 1.5840e+01 ------- 5.00e-01 accept

4 -1.1199e+01 3.4480e-01 1.6119e-02 2.1390e+01 ------- 5.00e-01 accept

5 -1.1201e+01 1.0073e-02 4.8506e-04 2.0766e+01 ------- 5.00e-01 accept

6 -1.1201e+01 1.0867e-04 5.1775e-06 2.0990e+01 ------- 5.00e-01 accept

7 -1.1201e+01 3.3607e-08 1.6011e-09 2.0990e+01 ------- 5.00e-01 accept

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 7: Quasi-Newton method with BFGS Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 5.1000e+01 1.0000e+00 ------- ------- -----

1 2.5347e+06 2.1389e+05 5.0988e+01 4.1950e+03 ------- ------- -----

2 1.4385e+01 5.0225e+01 1.1970e-02 4.1960e+03 ------- ------- -----

3 1.3788e+01 4.9468e+01 7.8223e-01 6.3240e+01 ------- ------- -----

4 -8.7761e+00 1.1999e+01 2.5050e-01 4.7900e+01 ------- ------- -----

5 -1.0797e+01 4.4562e+00 1.4799e-01 3.0111e+01 ------- ------- -----

6 -1.1184e+01 8.7657e-01 3.6239e-02 2.4188e+01 ------- ------- -----

7 -1.1201e+01 9.3432e-02 4.3236e-03 2.1610e+01 ------- ------- -----

8 -1.1201e+01 2.3882e-03 1.1341e-04 2.1058e+01 ------- ------- -----

9 -1.1201e+01 6.8113e-06 3.2439e-07 2.0998e+01 ------- ------- -----

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 8: Quasi-Newton method with BFGS Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 1.7512e+00 1.0000e+00 3.43e-02 ------- -----

1 -9.0674e+00 7.4167e+00 2.2233e-01 3.3359e+01 1.00e+00 ------- -----

2 -1.0458e+01 4.9083e+00 3.9155e-01 1.1282e+01 9.00e-01 ------- -----

3 -1.1073e+01 2.4333e+00 1.2978e-01 1.8750e+01 1.00e+00 ------- -----

4 -1.1197e+01 4.4617e-01 2.0109e-02 2.2188e+01 1.00e+00 ------- -----

5 -1.1201e+01 3.0206e-02 1.4602e-03 2.0686e+01 1.00e+00 ------- -----

6 -1.1201e+01 4.2458e-04 2.0241e-05 2.0977e+01 1.00e+00 ------- -----

Line search failure. alpha = 0.43046721000000016

7 -1.1201e+01 3.9417e-07 8.0888e-09 2.0977e+01 4.30e-01 ------- -----

x* = [-1.75447803]

Eigenvalues of Hessian at x* = [20.99602714+0.j]

***Case 9: Quasi-Newton method with BFGS Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 2.0000e+00 1.0000e+00 ------- 2.00e+00 accept

1 -7.0000e+00 9.0000e+00 3.0000e-01 3.0000e+01 ------- 5.00e-01 accept

2 -9.4350e+00 6.9360e+00 5.0000e-01 6.8800e+00 ------- 5.00e-01 accept

3 -1.1179e+01 9.8400e-01 6.2121e-02 1.5840e+01 ------- 5.00e-01 accept

4 -1.1199e+01 3.4480e-01 1.6119e-02 2.1390e+01 ------- 5.00e-01 accept

5 -1.1201e+01 1.0073e-02 4.8506e-04 2.0766e+01 ------- 5.00e-01 accept

6 -1.1201e+01 1.0867e-04 5.1775e-06 2.0990e+01 ------- 5.00e-01 accept

7 -1.1201e+01 3.3607e-08 1.6011e-09 2.0990e+01 ------- 5.00e-01 accept

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 10: Steepest Descent and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+01 5.1000e+01 1.7512e+00 1.0000e+00 3.43e-02 ------- -----

1 -9.0674e+00 7.4167e+00 7.3037e-01 1.0000e+00 9.85e-02 ------- -----

2 -1.0619e+01 5.4240e+00 3.1540e-01 1.0000e+00 5.81e-02 ------- -----

3 -1.1118e+01 1.7943e+00 1.2881e-01 1.0000e+00 7.18e-02 ------- -----

4 -1.1186e+01 8.2025e-01 5.2997e-02 1.0000e+00 6.46e-02 ------- -----

5 -1.1199e+01 3.0927e-01 2.2202e-02 1.0000e+00 7.18e-02 ------- -----

6 -1.1201e+01 1.5463e-01 9.9909e-03 1.0000e+00 6.46e-02 ------- -----

7 -1.1201e+01 5.5769e-02 3.6033e-03 1.0000e+00 6.46e-02 ------- -----

8 -1.1201e+01 1.9802e-02 1.2794e-03 1.0000e+00 6.46e-02 ------- -----

9 -1.1201e+01 7.0713e-03 4.5688e-04 1.0000e+00 6.46e-02 ------- -----

10 -1.1201e+01 2.5201e-03 1.6282e-04 1.0000e+00 6.46e-02 ------- -----

11 -1.1201e+01 8.9875e-04 5.8069e-05 1.0000e+00 6.46e-02 ------- -----

12 -1.1201e+01 3.2045e-04 2.0704e-05 1.0000e+00 6.46e-02 ------- -----

13 -1.1201e+01 1.1426e-04 7.3828e-06 1.0000e+00 6.46e-02 ------- -----

14 -1.1201e+01 4.0743e-05 2.6324e-06 1.0000e+00 6.46e-02 ------- -----

15 -1.1201e+01 1.4528e-05 9.3866e-07 1.0000e+00 6.46e-02 ------- -----

x* = [-1.75447829]

Eigenvalues of Hessian at x* = [20.9960341+0.j]

Discussion#

Did all of the cases converge to the nearby solution?

Answer

Benchmark Case with \(x_0 = 0\)#

x0 = np.zeros(1)

benchmark(x0,f_,df_,ddf_,False)

***Case 1: Newton method with exact Hessian and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 7.5000e-01 -8.0000e+00 ------- ------- -----

1 3.4863e+00 8.4375e-01 9.2466e-02 -9.1250e+00 ------- ------- -----

2 3.5250e+00 1.1244e-02 1.2024e-03 -9.3511e+00 ------- ------- -----

3 3.5250e+00 1.3700e-06 1.4654e-07 -9.3488e+00 ------- ------- -----

x* = [0.65873679]

Eigenvalues of Hessian at x* = [-9.34881579+0.j]

***Case 2: Newton method with exact Hessian and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

Line search failure. alpha = 0.9

0 1.5000e+00 6.0000e+00 6.7500e-01 -8.0000e+00 9.00e-01 ------- -----

Line search failure. alpha = 0.9

1 3.5238e+00 1.5178e-01 1.4663e-02 -9.3163e+00 9.00e-01 ------- -----

Line search failure. alpha = 0.9

2 3.5250e+00 1.4959e-02 1.4405e-03 -9.3458e+00 9.00e-01 ------- -----

Line search failure. alpha = 0.9

3 3.5250e+00 1.4939e-03 1.4382e-04 -9.3485e+00 9.00e-01 ------- -----

Line search failure. alpha = 0.9

4 3.5250e+00 1.4937e-04 1.4380e-05 -9.3488e+00 9.00e-01 ------- -----

Line search failure. alpha = 0.9

5 3.5250e+00 1.4937e-05 1.4379e-06 -9.3488e+00 9.00e-01 ------- -----

Line search failure. alpha = 0.9

6 3.5250e+00 1.4937e-06 1.4379e-07 -9.3488e+00 9.00e-01 ------- -----

x* = [0.65873681]

Eigenvalues of Hessian at x* = [-9.34881576+0.j]

***Case 3: Newton method with exact Hessian and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 7.5000e-01 -8.0000e+00 ------- 2.00e+00 accept

1 3.4863e+00 8.4375e-01 9.2466e-02 -9.1250e+00 ------- 2.00e+00 accept

2 3.5250e+00 1.1244e-02 1.2024e-03 -9.3511e+00 ------- 2.00e+00 accept

3 3.5250e+00 1.3700e-06 1.4654e-07 -9.3488e+00 ------- 2.00e+00 accept

x* = [0.65873679]

Eigenvalues of Hessian at x* = [-9.34881579+0.j]

***Case 4: Quasi-Newton method with SR1 Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 6.0000e+00 1.0000e+00 ------- ------- -----

1 6.8550e+02 4.8600e+02 5.9268e+00 8.2000e+01 ------- ------- -----

2 1.0400e+00 6.5685e+00 7.9036e-02 8.3108e+01 ------- ------- -----

3 4.9789e-01 7.1411e+00 9.8572e-01 -7.2446e+00 ------- ------- -----

4 3.3844e+00 1.5942e+00 1.7989e-01 -8.8618e+00 ------- ------- -----

5 3.5249e+00 4.7859e-02 5.2432e-03 -9.1279e+00 ------- ------- -----

6 3.5250e+00 1.1831e-03 1.2649e-04 -9.3535e+00 ------- ------- -----

7 3.5250e+00 6.0994e-07 6.5210e-08 -9.3535e+00 ------- ------- -----

x* = [0.65873679]

Eigenvalues of Hessian at x* = [-9.34881579+0.j]

***Case 5: Quasi-Newton method with SR1 Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 2.3245e+00 1.0000e+00 3.87e-01 ------- -----

1 -6.9020e+00 1.6735e+01 8.1839e-01 9.7804e+00 4.78e-01 ------- -----

2 -1.0621e+01 4.4106e+00 1.7070e-01 2.5838e+01 1.00e+00 ------- -----

3 -1.1140e+01 1.5495e+00 9.2452e-02 1.6760e+01 1.00e+00 ------- -----

4 -1.1199e+01 3.1396e-01 1.5576e-02 2.0156e+01 1.00e+00 ------- -----

5 -1.1201e+01 1.6045e-02 7.5733e-04 2.1186e+01 1.00e+00 ------- -----

6 -1.1201e+01 1.5212e-04 7.2490e-06 2.0986e+01 1.00e+00 ------- -----

Line search failure. alpha = 0.7290000000000001

7 -1.1201e+01 7.4964e-08 2.6041e-09 2.0986e+01 7.29e-01 ------- -----

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602745+0.j]

***Case 6: Quasi-Newton method with SR1 Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 2.0000e+00 1.0000e+00 ------- 2.00e+00 accept

1 -1.0500e+01 6.0000e+00 1.0000e+00 6.0000e+00 ------- 4.00e+00 reject

2 -1.0500e+01 6.0000e+00 2.5000e-01 6.0000e+00 ------- 2.50e-01 accept

3 -1.1201e+01 9.3750e-02 3.8462e-03 2.4375e+01 ------- 2.50e-01 accept

4 -1.1201e+01 1.3262e-02 6.3371e-04 2.0927e+01 ------- 2.50e-01 accept

5 -1.1201e+01 3.8373e-05 1.8284e-06 2.0988e+01 ------- 2.50e-01 accept

6 -1.1201e+01 1.5627e-08 7.4456e-10 2.0988e+01 ------- 2.50e-01 reject

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602741+0.j]

***Case 7: Quasi-Newton method with BFGS Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 6.0000e+00 1.0000e+00 ------- ------- -----

1 6.8550e+02 4.8600e+02 5.9268e+00 8.2000e+01 ------- ------- -----

2 1.0400e+00 6.5685e+00 7.9036e-02 8.3108e+01 ------- ------- -----

3 4.9789e-01 7.1411e+00 8.5925e-02 8.3108e+01 ------- ------- -----

4 -1.4051e-01 7.7079e+00 9.2746e-02 8.3108e+01 ------- ------- -----

5 -8.8097e-01 8.2461e+00 9.9222e-02 8.3108e+01 ------- ------- -----

6 -1.7239e+00 8.7267e+00 1.0500e-01 8.3108e+01 ------- ------- -----

7 -2.6617e+00 9.1154e+00 1.0968e-01 8.3108e+01 ------- ------- -----

8 -3.6772e+00 9.3749e+00 1.1280e-01 8.3108e+01 ------- ------- -----

9 -4.7418e+00 9.4693e+00 1.1394e-01 8.3108e+01 ------- ------- -----

10 -5.8169e+00 9.3696e+00 1.0710e+01 8.7485e-01 ------- ------- -----

11 9.9445e+03 3.4106e+03 1.0681e+01 3.1933e+02 ------- ------- -----

12 -6.0910e+00 9.3100e+00 2.9076e-02 3.2020e+02 ------- ------- -----

13 -6.3607e+00 9.2367e+00 3.6644e+00 2.5207e+00 ------- ------- -----

14 2.0926e+02 2.1453e+02 3.5132e+00 6.1064e+01 ------- ------- -----

15 -7.7160e+00 8.6147e+00 1.3563e-01 6.3516e+01 ------- ------- -----

16 -8.8258e+00 7.6891e+00 1.1266e+00 6.8249e+00 ------- ------- -----

17 -6.5781e+00 1.7468e+01 7.8228e-01 2.2329e+01 ------- ------- -----

18 -1.0841e+01 3.5674e+00 1.3267e-01 2.6889e+01 ------- ------- -----

19 -1.1164e+01 1.2235e+00 6.9256e-02 1.7667e+01 ------- ------- -----

20 -1.1201e+01 1.8230e-01 8.9808e-03 2.0299e+01 ------- ------- -----

21 -1.1201e+01 7.2657e-03 3.4421e-04 2.1108e+01 ------- ------- -----

22 -1.1201e+01 4.0262e-05 1.9180e-06 2.0991e+01 ------- ------- -----

23 -1.1201e+01 8.9798e-09 4.2779e-10 2.0991e+01 ------- ------- -----

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602743+0.j]

***Case 8: Quasi-Newton method with BFGS Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 2.3245e+00 1.0000e+00 3.87e-01 ------- -----

1 -6.9020e+00 1.6735e+01 8.1839e-01 9.7804e+00 4.78e-01 ------- -----

2 -1.0621e+01 4.4106e+00 1.7070e-01 2.5838e+01 1.00e+00 ------- -----

3 -1.1140e+01 1.5495e+00 9.2452e-02 1.6760e+01 1.00e+00 ------- -----

4 -1.1199e+01 3.1396e-01 1.5576e-02 2.0156e+01 1.00e+00 ------- -----

5 -1.1201e+01 1.6045e-02 7.5733e-04 2.1186e+01 1.00e+00 ------- -----

6 -1.1201e+01 1.5212e-04 7.2490e-06 2.0986e+01 1.00e+00 ------- -----

Line search failure. alpha = 0.7290000000000001

7 -1.1201e+01 7.4964e-08 2.6041e-09 2.0986e+01 7.29e-01 ------- -----

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602745+0.j]

***Case 9: Quasi-Newton method with BFGS Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 2.0000e+00 1.0000e+00 ------- 2.00e+00 accept

1 -1.0500e+01 6.0000e+00 1.0000e+00 6.0000e+00 ------- 4.00e+00 reject

2 -1.0500e+01 6.0000e+00 2.5000e-01 6.0000e+00 ------- 2.50e-01 accept

3 -1.1201e+01 9.3750e-02 3.8462e-03 2.4375e+01 ------- 2.50e-01 accept

4 -1.1201e+01 1.3262e-02 6.3371e-04 2.0927e+01 ------- 2.50e-01 accept

5 -1.1201e+01 3.8373e-05 1.8284e-06 2.0988e+01 ------- 2.50e-01 accept

6 -1.1201e+01 1.5627e-08 7.4456e-10 2.0988e+01 ------- 2.50e-01 reject

x* = [-1.75447804]

Eigenvalues of Hessian at x* = [20.99602741+0.j]

***Case 10: Steepest Descent and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.5000e+00 6.0000e+00 2.3245e+00 1.0000e+00 3.87e-01 ------- -----

1 -6.9020e+00 1.6735e+01 7.8823e-01 1.0000e+00 4.71e-02 ------- -----

2 -1.0747e+01 3.9578e+00 3.1570e-01 1.0000e+00 7.98e-02 ------- -----

3 -1.1097e+01 2.1780e+00 1.4072e-01 1.0000e+00 6.46e-02 ------- -----

4 -1.1182e+01 8.8201e-01 6.3319e-02 1.0000e+00 7.18e-02 ------- -----

5 -1.1197e+01 4.2785e-01 2.7644e-02 1.0000e+00 6.46e-02 ------- -----

6 -1.1201e+01 1.5728e-01 1.1291e-02 1.0000e+00 7.18e-02 ------- -----

7 -1.1201e+01 7.9215e-02 5.1182e-03 1.0000e+00 6.46e-02 ------- -----

8 -1.1201e+01 2.8413e-02 1.8358e-03 1.0000e+00 6.46e-02 ------- -----

9 -1.1201e+01 1.0109e-02 6.5318e-04 1.0000e+00 6.46e-02 ------- -----

10 -1.1201e+01 3.6075e-03 2.3308e-04 1.0000e+00 6.46e-02 ------- -----

11 -1.1201e+01 1.2860e-03 8.3088e-05 1.0000e+00 6.46e-02 ------- -----

12 -1.1201e+01 4.5858e-04 2.9629e-05 1.0000e+00 6.46e-02 ------- -----

13 -1.1201e+01 1.6351e-04 1.0565e-05 1.0000e+00 6.46e-02 ------- -----

14 -1.1201e+01 5.8304e-05 3.7671e-06 1.0000e+00 6.46e-02 ------- -----

15 -1.1201e+01 2.0789e-05 1.3432e-06 1.0000e+00 6.46e-02 ------- -----

16 -1.1201e+01 7.4129e-06 4.7895e-07 1.0000e+00 6.46e-02 ------- -----

x* = [-1.75447817]

Eigenvalues of Hessian at x* = [20.99603083+0.j]

Discussion#

Which cases converged to a local maximizer?

Answer:

Which cases converged to a local minimizer?

Answer:

Based on these results, which algorithms do you recommend for non-convex problems?

Answer:

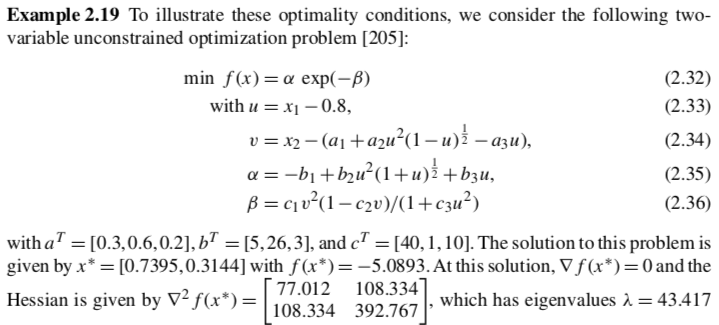

Return of Example 2.19#

Now we will benchmark the algorithms using Example 2.19.

Consider the function \(g(z) = \sqrt{z}\), which is only defined for \(z \geq 0\). We will shortly learn how handle bounds/inequality constraints in an optimization problem.

But for this assignment, we will focus on algorithms for unconstrained nonlinear optimization. We will use a smoothed approximation to ensure \(g(z)\) is defined and twice-differentiable over \(z \in \mathbb{R}\). Consider:

This ensures that \(g(z)\) is defined over \(z \in \mathbb{R}\). But what about twice-differentiable? We will further approximate

Below is an implementation of the Example 2.19 that uses this smoothed approximation.

def asqrt(z):

'''

Approximate/smoothed square root

'''

return np.sqrt(0.5*(np.sqrt(z**2 + 1E-4) + z))

def ex2_19_smoothed(x,verbose=False):

''' Evaluate function given above at point x

Inputs:

x - vector with 2 elements

Outputs:

f - function value (scalar)

'''

# Constants

a = np.array([0.3, 0.6, 0.2])

b = np.array([5, 26, 3])

c = np.array([40, 1, 10])

# Intermediates. Recall Python indicies start at 0

u = x[0] - 0.8

s = asqrt(1-u)

s2 = asqrt(1+u)

v = x[1] -(a[0] + a[1]*u**2*s-a[2]*u)

alpha = -b[0] + b[1]*u**2*s2+ b[2]*u

beta = c[0]*v**2*(1-c[1]*v)/(1+c[2]*u**2)

f = alpha*np.exp(-beta)

if verbose:

print("##### my_f at x = ",x, "#####")

print("u = ",u)

print("sqrt(1-u) = ",s)

print("sqrt(1+u) = ",s2)

print("v = ",v)

print("alpha = ",alpha)

print("beta = ",beta)

print("f(x) = ",f)

print("##### Done. #####\n")

return f

my_f2 = lambda x : ex2_19_smoothed(x,verbose=False)

my_grad2 = lambda x : my_grad_approx(x,my_f2,1E-6,verbose=False)

my_hes2 = lambda x : my_hes_approx(x,my_grad2,1E-6)

Benchmark with \(x_0\) Near Solution#

x0 = np.array([0.7, 0.3])

benchmark(x0,my_f2,my_grad2,my_hes2,False)

***Case 1: Newton method with exact Hessian and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 4.1947e-02 4.9370e+01 ------- ------- -----

1 -5.0888e+00 6.2734e-01 1.9755e-03 4.2542e+01 ------- ------- -----

2 -5.0893e+00 2.4208e-03 3.0022e-05 4.3424e+01 ------- ------- -----

3 -5.0893e+00 2.9175e-07 3.1334e-09 4.3418e+01 ------- ------- -----

x* = [0.73950642 0.31435986]

Eigenvalues of Hessian at x* = [ 43.41806155+0.j 426.36193114+0.j]

***Case 2: Newton method with exact Hessian and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 4.1947e-02 4.9370e+01 1.00e+00 ------- -----

1 -5.0888e+00 6.2734e-01 1.9755e-03 4.2542e+01 1.00e+00 ------- -----

2 -5.0893e+00 2.4208e-03 3.0022e-05 4.3424e+01 1.00e+00 ------- -----

3 -5.0893e+00 2.9175e-07 3.1334e-09 4.3418e+01 1.00e+00 ------- -----

x* = [0.73950642 0.31435986]

Eigenvalues of Hessian at x* = [ 43.41806155+0.j 426.36193114+0.j]

***Case 3: Newton method with exact Hessian and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 4.1947e-02 4.9370e+01 ------- 2.00e+00 accept

1 -5.0888e+00 6.2734e-01 1.9755e-03 4.2542e+01 ------- 2.00e+00 accept

2 -5.0893e+00 2.4208e-03 3.0022e-05 4.3424e+01 ------- 2.00e+00 accept

3 -5.0893e+00 2.9175e-07 3.1334e-09 4.3418e+01 ------- 2.00e+00 reject

x* = [0.73950642 0.31435986]

Eigenvalues of Hessian at x* = [ 43.41820666+0.j 426.36200807+0.j]

***Case 4: Quasi-Newton method with SR1 Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 1.0874e+01 1.0000e+00 ------- ------- -----

Warning: Hessian approximation is near singular.

B[k] =

[[ 2.40566051e+93 -1.88095467e+93]

[-1.88095467e+93 1.47069399e+93]]

x* = [ 5.43705574 10.08833764]

Eigenvalues of Hessian at x* = [ 8.63954930e+95+0.j -6.55410896e+91+0.j]

***Case 5: Quasi-Newton method with SR1 Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 3.6770e-02 1.0000e+00 3.38e-03 ------- -----

1 -5.0500e+00 4.4205e+00 4.4992e-02 1.0000e+00 3.82e-02 ------- -----

2 -5.0674e+00 3.6723e+00 1.8961e-02 3.7784e+01 1.00e+00 ------- -----

3 -5.0892e+00 9.7210e-02 2.4549e-04 3.7780e+01 1.00e+00 ------- -----

4 -5.0893e+00 5.8514e-03 4.0135e-05 3.9433e+01 1.00e+00 ------- -----

5 -5.0893e+00 1.4377e-04 3.2683e-06 4.3406e+01 1.00e+00 ------- -----

6 -5.0893e+00 2.0937e-07 1.1207e-09 4.3406e+01 1.00e+00 ------- -----

x* = [0.73950642 0.31435986]

Eigenvalues of Hessian at x* = [ 43.41818718+0.j 426.3618055 +0.j]

***Case 6: Quasi-Newton method with SR1 Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 2.0000e+00 1.0000e+00 ------- 2.00e+00 reject

1 -4.9246e+00 1.0874e+01 5.0000e-01 1.0000e+00 ------- 5.00e-01 reject

2 -4.9246e+00 1.0874e+01 1.2500e-01 1.0000e+00 ------- 1.25e-01 reject

3 -4.9246e+00 1.0874e+01 3.1250e-02 1.0000e+00 ------- 3.12e-02 accept

4 -5.0665e+00 2.3568e+00 3.1250e-02 1.0000e+00 ------- 3.12e-02 accept

5 -5.0881e+00 9.8076e-01 3.0776e-03 4.0771e+01 ------- 3.12e-02 accept

6 -5.0892e+00 4.6629e-02 4.8788e-04 4.4036e+01 ------- 3.12e-02 accept

7 -5.0893e+00 5.4140e-04 8.7158e-06 4.3387e+01 ------- 3.12e-02 accept

8 -5.0893e+00 1.7327e-06 1.1790e-08 4.3387e+01 ------- 3.12e-02 accept

x* = [0.73950642 0.31435986]

Eigenvalues of Hessian at x* = [ 43.41840922+0.j 426.36202755+0.j]

***Case 7: Quasi-Newton method with BFGS Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 1.0874e+01 1.0000e+00 ------- ------- -----

Warning: Hessian approximation is near singular.

B[k] =

[[ 2.40566051e+93 -1.88095467e+93]

[-1.88095467e+93 1.47069399e+93]]

x* = [ 5.43705574 10.08833764]

Eigenvalues of Hessian at x* = [ 8.63954930e+95+0.j -6.55410896e+91+0.j]

***Case 8: Quasi-Newton method with BFGS Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 3.6770e-02 1.0000e+00 3.38e-03 ------- -----

1 -5.0500e+00 4.4205e+00 4.5530e-02 9.8819e-01 3.82e-02 ------- -----

2 -5.0671e+00 3.6634e+00 1.9685e-02 3.7814e+01 1.00e+00 ------- -----

3 -5.0893e+00 8.3284e-03 2.0822e-04 3.8028e+01 1.00e+00 ------- -----

4 -5.0893e+00 1.6840e-03 2.6639e-05 4.3370e+01 1.00e+00 ------- -----

5 -5.0893e+00 3.4725e-05 8.9062e-08 4.3207e+01 1.00e+00 ------- -----

x* = [0.73950642 0.31435986]

Eigenvalues of Hessian at x* = [ 43.41837027+0.j 426.36162242+0.j]

***Case 9: Quasi-Newton method with BFGS Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 2.0000e+00 1.0000e+00 ------- 2.00e+00 reject

1 -4.9246e+00 1.0874e+01 5.0000e-01 1.0000e+00 ------- 5.00e-01 reject

2 -4.9246e+00 1.0874e+01 1.2500e-01 1.0000e+00 ------- 1.25e-01 reject

3 -4.9246e+00 1.0874e+01 3.1250e-02 1.0000e+00 ------- 3.12e-02 accept

4 -5.0665e+00 2.3568e+00 3.1250e-02 9.8768e-01 ------- 3.12e-02 accept

5 -5.0881e+00 9.8103e-01 3.1250e-02 6.6632e+00 ------- 3.12e-02 reject

6 -5.0881e+00 9.8103e-01 7.8125e-03 6.6632e+00 ------- 7.81e-03 reject

7 -5.0881e+00 9.8103e-01 1.9531e-03 6.6632e+00 ------- 1.95e-03 accept

8 -5.0892e+00 1.5608e-01 1.9531e-03 7.0647e+00 ------- 1.95e-03 accept

9 -5.0892e+00 2.7758e-02 7.6768e-04 3.0614e+01 ------- 1.95e-03 accept

10 -5.0893e+00 4.1299e-02 1.9410e-04 4.1031e+01 ------- 1.95e-03 accept

11 -5.0893e+00 2.1703e-02 8.1046e-05 3.6818e+01 ------- 1.95e-03 accept

12 -5.0893e+00 9.3297e-04 1.7774e-05 3.9135e+01 ------- 1.95e-03 accept

13 -5.0893e+00 1.0689e-04 1.5964e-06 4.3341e+01 ------- 1.95e-03 accept

14 -5.0893e+00 4.6643e-06 1.3806e-08 4.3341e+01 ------- 1.95e-03 accept

x* = [0.73950642 0.31435986]

Eigenvalues of Hessian at x* = [ 43.41838974+0.j 426.36182498+0.j]

***Case 10: Steepest Descent and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 -4.9246e+00 1.0874e+01 3.6770e-02 1.0000e+00 3.38e-03 ------- -----

1 -5.0500e+00 4.4205e+00 1.6608e-02 1.0000e+00 3.76e-03 ------- -----

2 -5.0703e+00 2.5775e+00 9.6841e-03 1.0000e+00 3.76e-03 ------- -----

3 -5.0783e+00 1.6164e+00 7.4976e-03 1.0000e+00 4.64e-03 ------- -----

4 -5.0815e+00 1.4787e+00 6.1731e-03 1.0000e+00 4.17e-03 ------- -----

5 -5.0842e+00 1.1500e+00 4.8006e-03 1.0000e+00 4.17e-03 ------- -----

6 -5.0859e+00 8.9734e-01 4.1622e-03 1.0000e+00 4.64e-03 ------- -----

7 -5.0869e+00 8.2888e-01 3.4602e-03 1.0000e+00 4.17e-03 ------- -----

8 -5.0877e+00 6.4810e-01 2.7055e-03 1.0000e+00 4.17e-03 ------- -----

9 -5.0883e+00 5.0889e-01 2.1244e-03 1.0000e+00 4.17e-03 ------- -----

10 -5.0886e+00 4.0020e-01 1.8563e-03 1.0000e+00 4.64e-03 ------- -----

11 -5.0888e+00 3.7147e-01 1.5507e-03 1.0000e+00 4.17e-03 ------- -----

12 -5.0890e+00 2.9181e-01 1.2182e-03 1.0000e+00 4.17e-03 ------- -----

13 -5.0891e+00 2.2973e-01 9.5903e-04 1.0000e+00 4.17e-03 ------- -----

14 -5.0891e+00 1.8105e-01 7.5581e-04 1.0000e+00 4.17e-03 ------- -----

15 -5.0892e+00 1.4294e-01 6.6299e-04 1.0000e+00 4.64e-03 ------- -----

16 -5.0892e+00 1.3277e-01 5.5425e-04 1.0000e+00 4.17e-03 ------- -----

17 -5.0892e+00 1.0457e-01 4.3654e-04 1.0000e+00 4.17e-03 ------- -----

18 -5.0892e+00 8.2435e-02 3.4413e-04 1.0000e+00 4.17e-03 ------- -----

19 -5.0892e+00 6.5066e-02 2.7162e-04 1.0000e+00 4.17e-03 ------- -----

20 -5.0892e+00 5.1406e-02 2.3844e-04 1.0000e+00 4.64e-03 ------- -----

21 -5.0892e+00 4.7773e-02 1.9943e-04 1.0000e+00 4.17e-03 ------- -----

22 -5.0893e+00 3.7645e-02 1.5715e-04 1.0000e+00 4.17e-03 ------- -----

23 -5.0893e+00 2.9694e-02 1.2396e-04 1.0000e+00 4.17e-03 ------- -----

24 -5.0893e+00 2.3443e-02 9.7864e-05 1.0000e+00 4.17e-03 ------- -----

25 -5.0893e+00 1.8527e-02 8.5936e-05 1.0000e+00 4.64e-03 ------- -----

26 -5.0893e+00 1.7227e-02 7.1917e-05 1.0000e+00 4.17e-03 ------- -----

27 -5.0893e+00 1.3578e-02 5.6681e-05 1.0000e+00 4.17e-03 ------- -----

28 -5.0893e+00 1.0710e-02 4.4711e-05 1.0000e+00 4.17e-03 ------- -----

29 -5.0893e+00 8.4562e-03 3.5301e-05 1.0000e+00 4.17e-03 ------- -----

30 -5.0893e+00 6.6826e-03 3.0997e-05 1.0000e+00 4.64e-03 ------- -----

31 -5.0893e+00 6.2174e-03 2.5955e-05 1.0000e+00 4.17e-03 ------- -----

32 -5.0893e+00 4.8999e-03 2.0455e-05 1.0000e+00 4.17e-03 ------- -----

33 -5.0893e+00 3.8650e-03 1.6134e-05 1.0000e+00 4.17e-03 ------- -----

34 -5.0893e+00 3.0512e-03 1.2738e-05 1.0000e+00 4.17e-03 ------- -----

35 -5.0893e+00 2.4111e-03 1.1184e-05 1.0000e+00 4.64e-03 ------- -----

36 -5.0893e+00 2.2445e-03 9.3697e-06 1.0000e+00 4.17e-03 ------- -----

37 -5.0893e+00 1.7687e-03 7.3837e-06 1.0000e+00 4.17e-03 ------- -----

38 -5.0893e+00 1.3950e-03 5.8235e-06 1.0000e+00 4.17e-03 ------- -----

39 -5.0893e+00 1.1012e-03 4.5969e-06 1.0000e+00 4.17e-03 ------- -----

40 -5.0893e+00 8.7002e-04 4.0355e-06 1.0000e+00 4.64e-03 ------- -----

41 -5.0893e+00 8.1036e-04 3.3829e-06 1.0000e+00 4.17e-03 ------- -----

42 -5.0893e+00 6.3853e-04 2.6656e-06 1.0000e+00 4.17e-03 ------- -----

43 -5.0893e+00 5.0354e-04 2.1020e-06 1.0000e+00 4.17e-03 ------- -----

44 -5.0893e+00 3.9742e-04 1.6591e-06 1.0000e+00 4.17e-03 ------- -----

45 -5.0893e+00 3.1396e-04 1.4563e-06 1.0000e+00 4.64e-03 ------- -----

46 -5.0893e+00 2.9259e-04 1.2215e-06 1.0000e+00 4.17e-03 ------- -----

47 -5.0893e+00 2.3052e-04 9.6233e-07 1.0000e+00 4.17e-03 ------- -----

x* = [0.73950438 0.31436011]

Eigenvalues of Hessian at x* = [ 43.41886108+0.j 426.36201978+0.j]

Discussion#

Which cases do not converge to the known solution?

Answer:

What is happening with the SR1 and BFGS update when no globalization strategy is used? Hint: This is related to the square root approximation.

Answer:

Benchmark with \(x_0\) Far From the Solution#

x0 = np.array([0.0, 0.0])

benchmark(x0,my_f2,my_grad2,my_hes2,False)

***Case 1: Newton method with exact Hessian and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 3.8594e-02 -2.9410e-03 ------- ------- -----

1 5.7117e-07 5.5951e-05 3.6202e-02 -1.0859e-03 ------- ------- -----

2 1.9240e-07 1.9977e-05 3.4172e-02 -4.0313e-04 ------- ------- -----

3 6.5310e-08 7.1521e-06 3.2420e-02 -1.5022e-04 ------- ------- -----

4 2.2312e-08 2.5663e-06 3.0889e-02 -5.6122e-05 ------- ------- -----

5 7.6637e-09 9.2257e-07 2.9536e-02 -2.1007e-05 ------- ------- -----

x* = [-0.00313333 -0.20178778]

Eigenvalues of Hessian at x* = [ 1.45576374e-05+0.j -7.87341863e-06+0.j]

***Case 2: Newton method with exact Hessian and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 3.8594e-02 -2.9410e-03 1.00e+00 ------- -----

1 5.7117e-07 5.5951e-05 3.6202e-02 -1.0859e-03 1.00e+00 ------- -----

2 1.9240e-07 1.9977e-05 3.4172e-02 -4.0313e-04 1.00e+00 ------- -----

3 6.5310e-08 7.1521e-06 3.2420e-02 -1.5022e-04 1.00e+00 ------- -----

4 2.2312e-08 2.5663e-06 3.0889e-02 -5.6122e-05 1.00e+00 ------- -----

5 7.6637e-09 9.2257e-07 2.9536e-02 -2.1007e-05 1.00e+00 ------- -----

x* = [-0.00313333 -0.20178778]

Eigenvalues of Hessian at x* = [ 1.45576374e-05+0.j -7.87341863e-06+0.j]

***Case 3: Newton method with exact Hessian and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 3.8594e-02 -2.9410e-03 ------- 2.00e+00 accept

1 5.7117e-07 5.5951e-05 3.6202e-02 -1.0859e-03 ------- 2.00e+00 accept

2 1.9240e-07 1.9977e-05 3.4172e-02 -4.0313e-04 ------- 2.00e+00 accept

3 6.5310e-08 7.1521e-06 3.2420e-02 -1.5022e-04 ------- 2.00e+00 accept

4 2.2312e-08 2.5663e-06 3.0889e-02 -5.6122e-05 ------- 2.00e+00 accept

5 7.6637e-09 9.2257e-07 2.9536e-02 -2.1007e-05 ------- 2.00e+00 accept

x* = [-0.00313333 -0.20178778]

Eigenvalues of Hessian at x* = [ 1.45576374e-05+0.j -7.87341863e-06+0.j]

***Case 4: Quasi-Newton method with SR1 Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 1.5723e-04 1.0000e+00 ------- ------- -----

1 1.6869e-06 1.5677e-04 5.3086e-02 2.9530e-03 ------- ------- -----

2 -2.3989e-06 7.2336e-05 4.0934e-03 2.7417e-03 ------- ------- -----

3 -2.4364e-06 7.1078e-05 3.7770e-03 1.5844e-03 ------- ------- -----

4 -2.4551e-06 7.0156e-05 1.6393e-03 1.4153e-03 ------- ------- -----

5 -2.4788e-06 7.0331e-05 7.1786e-02 8.7441e-04 ------- ------- -----

6 -1.3803e-05 3.5715e-04 7.7165e-02 -4.7635e-03 ------- ------- -----

7 -1.8335e-06 5.2108e-05 1.2853e-02 -4.0717e-03 ------- ------- -----

8 -1.2703e-06 3.6881e-05 3.1009e-02 -1.1894e-03 ------- ------- -----

9 -5.0534e-07 1.5390e-05 2.2172e-02 -6.9356e-04 ------- ------- -----

10 -2.5506e-07 8.0241e-06 2.4104e-02 -3.3191e-04 ------- ------- -----

11 -1.1847e-07 3.8571e-06 2.2260e-02 -1.7236e-04 ------- ------- -----

12 -5.7063e-08 1.9164e-06 2.1932e-02 -8.6740e-05 ------- ------- -----

13 -2.7199e-08 9.4119e-07 2.1123e-02 -4.4158e-05 ------- ------- -----

x* = [-0.08873597 -0.1844557 ]

Eigenvalues of Hessian at x* = [ 3.41429300e-06+0.j -1.58482887e-05+0.j]

***Case 5: Quasi-Newton method with SR1 Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 1.5723e-04 1.0000e+00 1.00e+00 ------- -----

1 1.6869e-06 1.5677e-04 5.3086e-02 2.9530e-03 1.00e+00 ------- -----

2 -2.3989e-06 7.2336e-05 4.0934e-03 2.7417e-03 1.00e+00 ------- -----

3 -2.4364e-06 7.1078e-05 3.7770e-03 1.5844e-03 1.00e+00 ------- -----

4 -2.4551e-06 7.0156e-05 1.6393e-03 1.4153e-03 1.00e+00 ------- -----

5 -2.4788e-06 7.0331e-05 7.1786e-02 8.7441e-04 1.00e+00 ------- -----

Line search failure. alpha = 0.9

6 -1.3803e-05 3.5715e-04 6.9449e-02 -4.7635e-03 9.00e-01 ------- -----

Line search failure. alpha = 4.353241455684411e-14

7 -2.2680e-06 6.3846e-05 6.4013e-16 -4.3566e-03 4.35e-14 ------- -----

x* = [-0.08201558 -0.02174002]

Eigenvalues of Hessian at x* = [ 0.00067133+0.j -0.00170098+0.j]

***Case 6: Quasi-Newton method with SR1 Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 1.5723e-04 1.0000e+00 ------- 2.00e+00 accept

1 1.6869e-06 1.5677e-04 5.3086e-02 2.9530e-03 ------- 2.00e+00 accept

2 -2.3989e-06 7.2336e-05 4.0934e-03 2.7417e-03 ------- 2.00e+00 accept

3 -2.4364e-06 7.1078e-05 3.7770e-03 1.5844e-03 ------- 2.00e+00 accept

4 -2.4551e-06 7.0156e-05 1.6393e-03 1.4153e-03 ------- 2.00e+00 accept

5 -2.4788e-06 7.0331e-05 7.1786e-02 8.7441e-04 ------- 2.00e+00 accept

6 -1.3803e-05 3.5715e-04 7.7165e-02 -4.7635e-03 ------- 2.00e+00 accept

7 -1.8335e-06 5.2108e-05 1.2853e-02 -4.0717e-03 ------- 2.00e+00 accept

8 -1.2703e-06 3.6881e-05 3.1009e-02 -1.1894e-03 ------- 2.00e+00 accept

9 -5.0534e-07 1.5390e-05 2.2172e-02 -6.9356e-04 ------- 2.00e+00 accept

10 -2.5506e-07 8.0241e-06 2.4104e-02 -3.3191e-04 ------- 2.00e+00 accept

11 -1.1847e-07 3.8571e-06 2.2260e-02 -1.7236e-04 ------- 2.00e+00 accept

12 -5.7063e-08 1.9164e-06 2.1932e-02 -8.6740e-05 ------- 2.00e+00 accept

13 -2.7199e-08 9.4119e-07 2.1123e-02 -4.4158e-05 ------- 2.00e+00 accept

x* = [-0.08873597 -0.1844557 ]

Eigenvalues of Hessian at x* = [ 3.41429300e-06+0.j -1.58482887e-05+0.j]

***Case 7: Quasi-Newton method with BFGS Hessian approximation and no globalization strategy***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 1.5723e-04 1.0000e+00 ------- ------- -----

1 1.6869e-06 1.5677e-04 5.3086e-02 2.9531e-03 ------- ------- -----

2 -2.3988e-06 7.2335e-05 4.0930e-03 2.7417e-03 ------- ------- -----

3 -2.4363e-06 7.1075e-05 3.7674e-03 1.5843e-03 ------- ------- -----

4 -2.4541e-06 7.0132e-05 1.4838e-03 1.4144e-03 ------- ------- -----

5 -2.4625e-06 6.9939e-05 1.3871e-04 1.4144e-03 ------- ------- -----

6 -2.4692e-06 7.0090e-05 9.6823e-05 1.4144e-03 ------- ------- -----

7 -2.4760e-06 7.0270e-05 9.8466e-05 1.4144e-03 ------- ------- -----

8 -2.4828e-06 7.0453e-05 9.8927e-05 1.4144e-03 ------- ------- -----

9 -2.4897e-06 7.0637e-05 9.9206e-05 1.4144e-03 ------- ------- -----

10 -2.4965e-06 7.0822e-05 9.9468e-05 1.4144e-03 ------- ------- -----

11 -2.5035e-06 7.1008e-05 9.9729e-05 1.4144e-03 ------- ------- -----

12 -2.5104e-06 7.1195e-05 9.9992e-05 1.4144e-03 ------- ------- -----

13 -2.5174e-06 7.1383e-05 1.0026e-04 1.4144e-03 ------- ------- -----

14 -2.5244e-06 7.1572e-05 1.0052e-04 1.4144e-03 ------- ------- -----

15 -2.5315e-06 7.1762e-05 1.0079e-04 1.4144e-03 ------- ------- -----

16 -2.5386e-06 7.1952e-05 1.0105e-04 1.4144e-03 ------- ------- -----

17 -2.5458e-06 7.2144e-05 1.0132e-04 1.4144e-03 ------- ------- -----

18 -2.5529e-06 7.2337e-05 1.0159e-04 1.4144e-03 ------- ------- -----

19 -2.5602e-06 7.2531e-05 1.0187e-04 1.4144e-03 ------- ------- -----

20 -2.5674e-06 7.2726e-05 1.0214e-04 1.4144e-03 ------- ------- -----

21 -2.5747e-06 7.2922e-05 1.0241e-04 1.4144e-03 ------- ------- -----

22 -2.5820e-06 7.3119e-05 1.0269e-04 1.4144e-03 ------- ------- -----

23 -2.5894e-06 7.3316e-05 1.0297e-04 1.4144e-03 ------- ------- -----

24 -2.5968e-06 7.3515e-05 1.0325e-04 1.4144e-03 ------- ------- -----

25 -2.6043e-06 7.3715e-05 1.0353e-04 1.4144e-03 ------- ------- -----

26 -2.6118e-06 7.3916e-05 1.0381e-04 1.4144e-03 ------- ------- -----

27 -2.6193e-06 7.4119e-05 1.0409e-04 1.4144e-03 ------- ------- -----

28 -2.6269e-06 7.4322e-05 1.0438e-04 1.4144e-03 ------- ------- -----

29 -2.6345e-06 7.4526e-05 1.0467e-04 1.4144e-03 ------- ------- -----

30 -2.6422e-06 7.4731e-05 1.0495e-04 1.4144e-03 ------- ------- -----

31 -2.6499e-06 7.4938e-05 1.0524e-04 1.4144e-03 ------- ------- -----

32 -2.6576e-06 7.5146e-05 1.0553e-04 1.4144e-03 ------- ------- -----

33 -2.6654e-06 7.5354e-05 1.0583e-04 1.4144e-03 ------- ------- -----

34 -2.6733e-06 7.5564e-05 1.0612e-04 1.4144e-03 ------- ------- -----

35 -2.6811e-06 7.5775e-05 1.0642e-04 1.4144e-03 ------- ------- -----

36 -2.6891e-06 7.5987e-05 1.0672e-04 1.4144e-03 ------- ------- -----

37 -2.6970e-06 7.6201e-05 1.0701e-04 1.4144e-03 ------- ------- -----

38 -2.7050e-06 7.6415e-05 1.0732e-04 1.4144e-03 ------- ------- -----

39 -2.7131e-06 7.6631e-05 1.0762e-04 1.4144e-03 ------- ------- -----

40 -2.7212e-06 7.6847e-05 1.0792e-04 1.4144e-03 ------- ------- -----

41 -2.7293e-06 7.7065e-05 1.0823e-04 1.4144e-03 ------- ------- -----

42 -2.7375e-06 7.7285e-05 1.0854e-04 1.4144e-03 ------- ------- -----

43 -2.7458e-06 7.7505e-05 1.0884e-04 1.4144e-03 ------- ------- -----

44 -2.7541e-06 7.7727e-05 1.0916e-04 1.4144e-03 ------- ------- -----

45 -2.7624e-06 7.7949e-05 1.0947e-04 1.4144e-03 ------- ------- -----

46 -2.7708e-06 7.8174e-05 1.0978e-04 1.4144e-03 ------- ------- -----

47 -2.7792e-06 7.8399e-05 1.1010e-04 1.4144e-03 ------- ------- -----

48 -2.7877e-06 7.8625e-05 1.1042e-04 1.4144e-03 ------- ------- -----

49 -2.7962e-06 7.8853e-05 1.1074e-04 1.4144e-03 ------- ------- -----

Reached maximum number of iterations.

x* = [-0.06054287 -0.01247025]

Eigenvalues of Hessian at x* = [ 0.00145694+0.j -0.00207929+0.j]

***Case 8: Quasi-Newton method with BFGS Hessian approximation and line search***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 1.5723e-04 1.0000e+00 1.00e+00 ------- -----

1 1.6869e-06 1.5677e-04 5.3086e-02 2.9531e-03 1.00e+00 ------- -----

2 -2.3988e-06 7.2335e-05 4.0930e-03 2.7417e-03 1.00e+00 ------- -----

3 -2.4363e-06 7.1075e-05 3.7674e-03 1.5843e-03 1.00e+00 ------- -----

4 -2.4541e-06 7.0132e-05 1.4838e-03 1.4144e-03 1.00e+00 ------- -----

5 -2.4625e-06 6.9939e-05 1.3871e-04 1.4144e-03 1.00e+00 ------- -----

6 -2.4692e-06 7.0090e-05 9.6823e-05 1.4144e-03 1.00e+00 ------- -----

7 -2.4760e-06 7.0270e-05 9.8466e-05 1.4144e-03 1.00e+00 ------- -----

8 -2.4828e-06 7.0453e-05 9.8927e-05 1.4144e-03 1.00e+00 ------- -----

9 -2.4897e-06 7.0637e-05 9.9206e-05 1.4144e-03 1.00e+00 ------- -----

10 -2.4965e-06 7.0822e-05 9.9468e-05 1.4144e-03 1.00e+00 ------- -----

11 -2.5035e-06 7.1008e-05 9.9729e-05 1.4144e-03 1.00e+00 ------- -----

12 -2.5104e-06 7.1195e-05 9.9992e-05 1.4144e-03 1.00e+00 ------- -----

13 -2.5174e-06 7.1383e-05 1.0026e-04 1.4144e-03 1.00e+00 ------- -----

14 -2.5244e-06 7.1572e-05 1.0052e-04 1.4144e-03 1.00e+00 ------- -----

15 -2.5315e-06 7.1762e-05 1.0079e-04 1.4144e-03 1.00e+00 ------- -----

16 -2.5386e-06 7.1952e-05 1.0105e-04 1.4144e-03 1.00e+00 ------- -----

17 -2.5458e-06 7.2144e-05 1.0132e-04 1.4144e-03 1.00e+00 ------- -----

18 -2.5529e-06 7.2337e-05 1.0159e-04 1.4144e-03 1.00e+00 ------- -----

19 -2.5602e-06 7.2531e-05 1.0187e-04 1.4144e-03 1.00e+00 ------- -----

20 -2.5674e-06 7.2726e-05 1.0214e-04 1.4144e-03 1.00e+00 ------- -----

21 -2.5747e-06 7.2922e-05 1.0241e-04 1.4144e-03 1.00e+00 ------- -----

22 -2.5820e-06 7.3119e-05 1.0269e-04 1.4144e-03 1.00e+00 ------- -----

23 -2.5894e-06 7.3316e-05 1.0297e-04 1.4144e-03 1.00e+00 ------- -----

24 -2.5968e-06 7.3515e-05 1.0325e-04 1.4144e-03 1.00e+00 ------- -----

25 -2.6043e-06 7.3715e-05 1.0353e-04 1.4144e-03 1.00e+00 ------- -----

26 -2.6118e-06 7.3916e-05 1.0381e-04 1.4144e-03 1.00e+00 ------- -----

27 -2.6193e-06 7.4119e-05 1.0409e-04 1.4144e-03 1.00e+00 ------- -----

28 -2.6269e-06 7.4322e-05 1.0438e-04 1.4144e-03 1.00e+00 ------- -----

29 -2.6345e-06 7.4526e-05 1.0467e-04 1.4144e-03 1.00e+00 ------- -----

30 -2.6422e-06 7.4731e-05 1.0495e-04 1.4144e-03 1.00e+00 ------- -----

31 -2.6499e-06 7.4938e-05 1.0524e-04 1.4144e-03 1.00e+00 ------- -----

32 -2.6576e-06 7.5146e-05 1.0553e-04 1.4144e-03 1.00e+00 ------- -----

33 -2.6654e-06 7.5354e-05 1.0583e-04 1.4144e-03 1.00e+00 ------- -----

34 -2.6733e-06 7.5564e-05 1.0612e-04 1.4144e-03 1.00e+00 ------- -----

35 -2.6811e-06 7.5775e-05 1.0642e-04 1.4144e-03 1.00e+00 ------- -----

36 -2.6891e-06 7.5987e-05 1.0672e-04 1.4144e-03 1.00e+00 ------- -----

37 -2.6970e-06 7.6201e-05 1.0701e-04 1.4144e-03 1.00e+00 ------- -----

38 -2.7050e-06 7.6415e-05 1.0732e-04 1.4144e-03 1.00e+00 ------- -----

39 -2.7131e-06 7.6631e-05 1.0762e-04 1.4144e-03 1.00e+00 ------- -----

40 -2.7212e-06 7.6847e-05 1.0792e-04 1.4144e-03 1.00e+00 ------- -----

41 -2.7293e-06 7.7065e-05 1.0823e-04 1.4144e-03 1.00e+00 ------- -----

42 -2.7375e-06 7.7285e-05 1.0854e-04 1.4144e-03 1.00e+00 ------- -----

43 -2.7458e-06 7.7505e-05 1.0884e-04 1.4144e-03 1.00e+00 ------- -----

44 -2.7541e-06 7.7727e-05 1.0916e-04 1.4144e-03 1.00e+00 ------- -----

45 -2.7624e-06 7.7949e-05 1.0947e-04 1.4144e-03 1.00e+00 ------- -----

46 -2.7708e-06 7.8174e-05 1.0978e-04 1.4144e-03 1.00e+00 ------- -----

47 -2.7792e-06 7.8399e-05 1.1010e-04 1.4144e-03 1.00e+00 ------- -----

48 -2.7877e-06 7.8625e-05 1.1042e-04 1.4144e-03 1.00e+00 ------- -----

49 -2.7962e-06 7.8853e-05 1.1074e-04 1.4144e-03 1.00e+00 ------- -----

Reached maximum number of iterations.

x* = [-0.06054287 -0.01247025]

Eigenvalues of Hessian at x* = [ 0.00145694+0.j -0.00207929+0.j]

***Case 9: Quasi-Newton method with BFGS Hessian approximation and trust region***

Iter. f(x) ||grad(x)|| ||p|| min(eig(B)) alpha delta step

0 1.7116e-06 1.5723e-04 1.5723e-04 1.0000e+00 ------- 2.00e+00 accept

1 1.6869e-06 1.5677e-04 5.3086e-02 2.9531e-03 ------- 2.00e+00 accept

2 -2.3988e-06 7.2335e-05 4.0930e-03 2.7417e-03 ------- 2.00e+00 accept

3 -2.4363e-06 7.1075e-05 3.7674e-03 1.5843e-03 ------- 2.00e+00 accept

4 -2.4541e-06 7.0132e-05 1.4838e-03 1.4144e-03 ------- 2.00e+00 accept

5 -2.4625e-06 6.9939e-05 1.3871e-04 1.4144e-03 ------- 2.00e+00 accept

6 -2.4692e-06 7.0090e-05 9.6823e-05 1.4144e-03 ------- 2.00e+00 accept

7 -2.4760e-06 7.0270e-05 9.8466e-05 1.4144e-03 ------- 2.00e+00 accept

8 -2.4828e-06 7.0453e-05 9.8927e-05 1.4144e-03 ------- 2.00e+00 accept

9 -2.4897e-06 7.0637e-05 9.9206e-05 1.4144e-03 ------- 2.00e+00 accept

10 -2.4965e-06 7.0822e-05 9.9468e-05 1.4144e-03 ------- 2.00e+00 accept

11 -2.5035e-06 7.1008e-05 9.9729e-05 1.4144e-03 ------- 2.00e+00 accept

12 -2.5104e-06 7.1195e-05 9.9992e-05 1.4144e-03 ------- 2.00e+00 accept

13 -2.5174e-06 7.1383e-05 1.0026e-04 1.4144e-03 ------- 2.00e+00 accept

14 -2.5244e-06 7.1572e-05 1.0052e-04 1.4144e-03 ------- 2.00e+00 accept

15 -2.5315e-06 7.1762e-05 1.0079e-04 1.4144e-03 ------- 2.00e+00 accept

16 -2.5386e-06 7.1952e-05 1.0105e-04 1.4144e-03 ------- 2.00e+00 accept

17 -2.5458e-06 7.2144e-05 1.0132e-04 1.4144e-03 ------- 2.00e+00 accept

18 -2.5529e-06 7.2337e-05 1.0159e-04 1.4144e-03 ------- 2.00e+00 accept

19 -2.5602e-06 7.2531e-05 1.0187e-04 1.4144e-03 ------- 2.00e+00 accept

20 -2.5674e-06 7.2726e-05 1.0214e-04 1.4144e-03 ------- 2.00e+00 accept

21 -2.5747e-06 7.2922e-05 1.0241e-04 1.4144e-03 ------- 2.00e+00 accept

22 -2.5820e-06 7.3119e-05 1.0269e-04 1.4144e-03 ------- 2.00e+00 accept

23 -2.5894e-06 7.3316e-05 1.0297e-04 1.4144e-03 ------- 2.00e+00 accept

24 -2.5968e-06 7.3515e-05 1.0325e-04 1.4144e-03 ------- 2.00e+00 accept

25 -2.6043e-06 7.3715e-05 1.0353e-04 1.4144e-03 ------- 2.00e+00 accept

26 -2.6118e-06 7.3916e-05 1.0381e-04 1.4144e-03 ------- 2.00e+00 accept

27 -2.6193e-06 7.4119e-05 1.0409e-04 1.4144e-03 ------- 2.00e+00 accept

28 -2.6269e-06 7.4322e-05 1.0438e-04 1.4144e-03 ------- 2.00e+00 accept

29 -2.6345e-06 7.4526e-05 1.0467e-04 1.4144e-03 ------- 2.00e+00 accept

30 -2.6422e-06 7.4731e-05 1.0495e-04 1.4144e-03 ------- 2.00e+00 accept

31 -2.6499e-06 7.4938e-05 1.0524e-04 1.4144e-03 ------- 2.00e+00 accept

32 -2.6576e-06 7.5146e-05 1.0553e-04 1.4144e-03 ------- 2.00e+00 accept

33 -2.6654e-06 7.5354e-05 1.0583e-04 1.4144e-03 ------- 2.00e+00 accept

34 -2.6733e-06 7.5564e-05 1.0612e-04 1.4144e-03 ------- 2.00e+00 accept

35 -2.6811e-06 7.5775e-05 1.0642e-04 1.4144e-03 ------- 2.00e+00 accept

36 -2.6891e-06 7.5987e-05 1.0672e-04 1.4144e-03 ------- 2.00e+00 accept

37 -2.6970e-06 7.6201e-05 1.0701e-04 1.4144e-03 ------- 2.00e+00 accept

38 -2.7050e-06 7.6415e-05 1.0732e-04 1.4144e-03 ------- 2.00e+00 accept

39 -2.7131e-06 7.6631e-05 1.0762e-04 1.4144e-03 ------- 2.00e+00 accept

40 -2.7212e-06 7.6847e-05 1.0792e-04 1.4144e-03 ------- 2.00e+00 accept

41 -2.7293e-06 7.7065e-05 1.0823e-04 1.4144e-03 ------- 2.00e+00 accept

42 -2.7375e-06 7.7285e-05 1.0854e-04 1.4144e-03 ------- 2.00e+00 accept

43 -2.7458e-06 7.7505e-05 1.0884e-04 1.4144e-03 ------- 2.00e+00 accept