{

"cells": [

{

"cell_type": "markdown",

"id": "b6a5f3df",

"metadata": {},

"source": [

"\n",

"*This notebook contains material from [CBE60499](https://ndcbe.github.io/CBE60499);\n",

"content is available [on Github](git@github.com:ndcbe/CBE60499.git).*\n"

]

},

{

"cell_type": "markdown",

"id": "b1e06a6e",

"metadata": {},

"source": [

"\n",

"< [3.4 Newton-type Methods for Unconstrained Optimization](https://ndcbe.github.io/CBE60499/03.04-Netwon-Methods.html) | [Contents](toc.html) | [Tag Index](tag_index.html) | [3.6 Descent and Globalization](https://ndcbe.github.io/CBE60499/03.06-Globalization.html) >

"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 1,

"link": "[3.5 Quasi-Newton Methods for Unconstrained Optimization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5-Quasi-Newton-Methods-for-Unconstrained-Optimization)",

"section": "3.5 Quasi-Newton Methods for Unconstrained Optimization"

}

},

"source": [

"# 3.5 Quasi-Newton Methods for Unconstrained Optimization\n",

"\n",

"**Reference**: Sections 3.1 - 3.3 in Biegler (2010)"

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {

"nbpages": {

"level": 1,

"link": "[3.5 Quasi-Newton Methods for Unconstrained Optimization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5-Quasi-Newton-Methods-for-Unconstrained-Optimization)",

"section": "3.5 Quasi-Newton Methods for Unconstrained Optimization"

}

},

"outputs": [],

"source": [

"# Load required Python libraries.\n",

"import matplotlib.pyplot as plt\n",

"import numpy as np\n",

"from scipy import linalg"

]

},

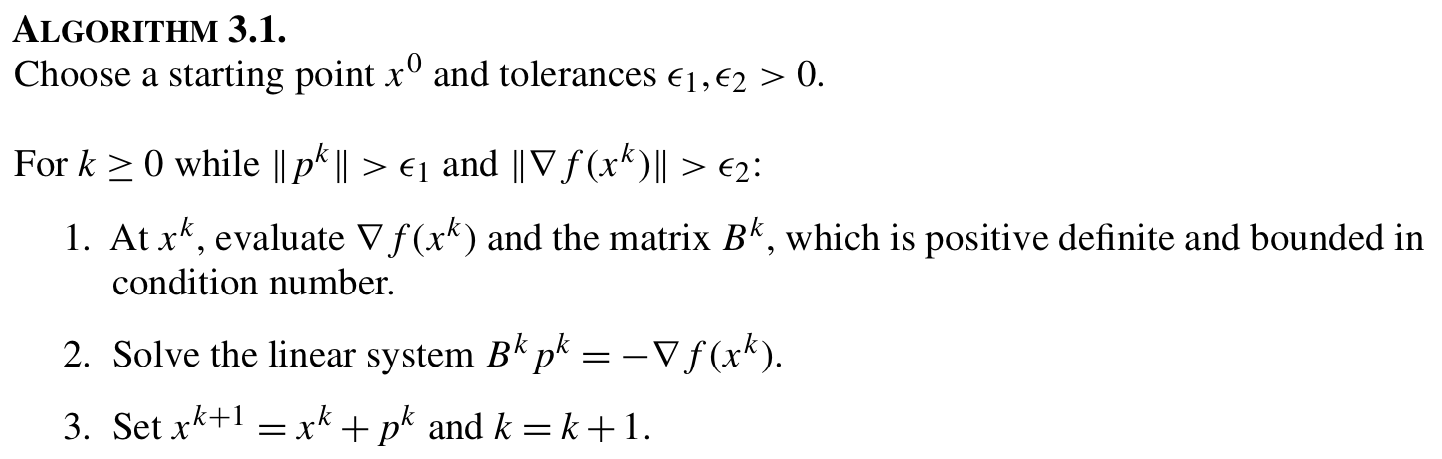

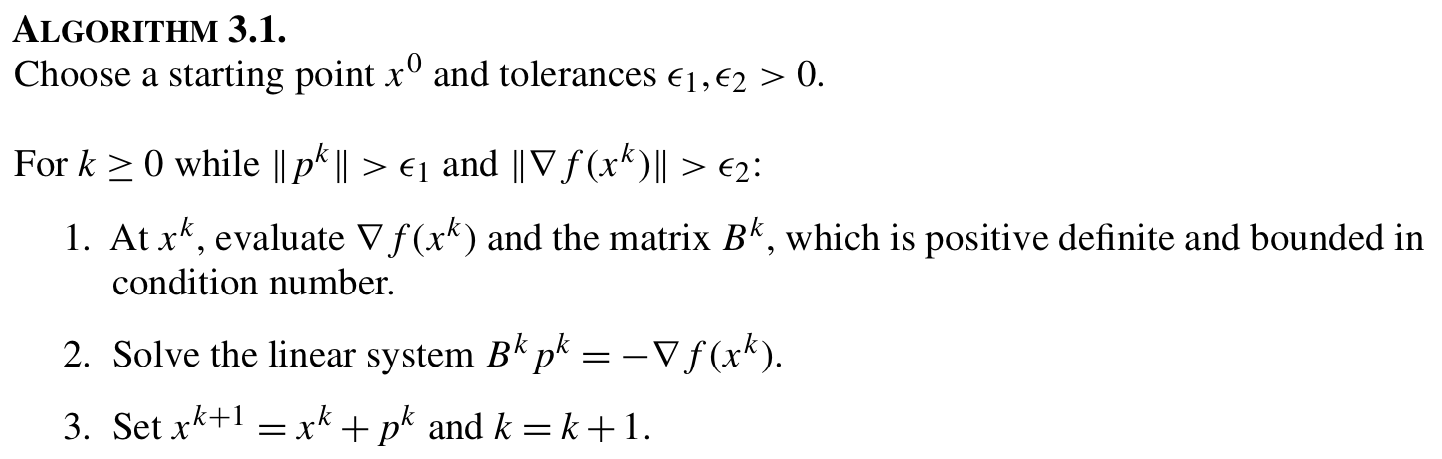

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.1 Unconstrained Optimization with Approximate Hessian](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1-Unconstrained-Optimization-with-Approximate-Hessian)",

"section": "3.5.1 Unconstrained Optimization with Approximate Hessian"

}

},

"source": [

"## 3.5.1 Unconstrained Optimization with Approximate Hessian"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.1 Unconstrained Optimization with Approximate Hessian](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1-Unconstrained-Optimization-with-Approximate-Hessian)",

"section": "3.5.1 Unconstrained Optimization with Approximate Hessian"

}

},

"source": [

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.1 Unconstrained Optimization with Approximate Hessian](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1-Unconstrained-Optimization-with-Approximate-Hessian)",

"section": "3.5.1 Unconstrained Optimization with Approximate Hessian"

}

},

"source": [

"SR1 update is one way to approximate $B^k$."

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.1 Library of helper functions](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.1-Library-of-helper-functions)",

"section": "3.5.1.1 Library of helper functions"

}

},

"source": [

"### 3.5.1.1 Library of helper functions"

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.1 Library of helper functions](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.1-Library-of-helper-functions)",

"section": "3.5.1.1 Library of helper functions"

}

},

"outputs": [],

"source": [

"## Check is element of array is NaN\n",

"def check_nan(A):\n",

" return np.sum(np.isnan(A))\n",

"\n",

"## Calculate gradient with central finite difference\n",

"def my_grad_approx(x,f,eps1,verbose=False):\n",

" '''\n",

" Calculate gradient of function my_f using central difference formula\n",

" \n",

" Inputs:\n",

" x - point for which to evaluate gradient\n",

" f - function to consider\n",

" eps1 - perturbation size\n",

" \n",

" Outputs:\n",

" grad - gradient (vector)\n",

" '''\n",

" \n",

" n = len(x)\n",

" grad = np.zeros(n)\n",

" \n",

" if(verbose):\n",

" print(\"***** my_grad_approx at x = \",x,\"*****\")\n",

" \n",

" for i in range(0,n):\n",

" \n",

" # Create vector of zeros except eps in position i\n",

" e = np.zeros(n)\n",

" e[i] = eps1\n",

" \n",

" # Finite difference formula\n",

" my_f_plus = f(x + e)\n",

" my_f_minus = f(x - e)\n",

" \n",

" # Diagnostics\n",

" if(verbose):\n",

" print(\"e[\",i,\"] = \",e)\n",

" print(\"f(x + e[\",i,\"]) = \",my_f_plus)\n",

" print(\"f(x - e[\",i,\"]) = \",my_f_minus)\n",

" \n",

" \n",

" grad[i] = (my_f_plus - my_f_minus)/(2*eps1)\n",

" \n",

" if(verbose):\n",

" print(\"***** Done. ***** \\n\")\n",

" \n",

" return grad\n",

"\n",

"## Calculate Hessian using cental finite difference\n",

"def my_hes_approx(x,grad,eps2):\n",

" '''\n",

" Calculate gradient of function my_f using central difference formula and my_grad\n",

" \n",

" Inputs:\n",

" x - point for which to evaluate gradient\n",

" grad - function to calculate the gradient\n",

" eps2 - perturbation size (for Hessian NOT gradient approximation)\n",

" \n",

" Outputs:\n",

" H - Hessian (matrix)\n",

" '''\n",

" \n",

" n = len(x)\n",

" H = np.zeros([n,n])\n",

" \n",

" for i in range(0,n):\n",

" # Create vector of zeros except eps in position i\n",

" e = np.zeros(n)\n",

" e[i] = eps2\n",

" \n",

" # Evaluate gradient twice\n",

" grad_plus = grad(x + e)\n",

" grad_minus = grad(x - e)\n",

" \n",

" # Notice we are building the Hessian by column (or row)\n",

" H[:,i] = (grad_plus - grad_minus)/(2*eps2)\n",

"\n",

" return H\n",

"\n",

"## Linear algebra calculation\n",

"def xxT(u):\n",

" '''\n",

" Calculates u*u.T to circumvent limitation with SciPy\n",

" \n",

" Arguments:\n",

" u - numpy 1D array\n",

" \n",

" Returns:\n",

" u*u.T\n",

" \n",

" Assume u is a nx1 vector.\n",

" Recall: NumPy does not distinguish between row or column vectors\n",

" \n",

" u.dot(u) returns a scalar. This functon returns an nxn matrix.\n",

" '''\n",

" \n",

" n = len(u)\n",

" A = np.zeros([n,n])\n",

" for i in range(0,n):\n",

" for j in range(0,n):\n",

" A[i,j] = u[i]*u[j]\n",

" \n",

" return A\n",

"\n",

"## Analyze Hessian\n",

"def analyze_hes(B):\n",

" print(B,\"\\n\")\n",

" \n",

" l = linalg.eigvals(B)\n",

" print(\"Eigenvalues: \",l,\"\\n\")\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.2 Symmetric Rank 1 (SR1) Update](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.2-Symmetric-Rank-1-(SR1)-Update)",

"section": "3.5.1.2 Symmetric Rank 1 (SR1) Update"

}

},

"source": [

"### 3.5.1.2 Symmetric Rank 1 (SR1) Update"

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.2 Symmetric Rank 1 (SR1) Update](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.2-Symmetric-Rank-1-(SR1)-Update)",

"section": "3.5.1.2 Symmetric Rank 1 (SR1) Update"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Done.\n"

]

}

],

"source": [

"def alg1_sr1(x0,calc_f,calc_grad,eps1,eps2,verbose=False,max_iter=1000):\n",

" '''\n",

" Arguments:\n",

" x0 - starting point\n",

" calc_f - funcation that calculates f(x)\n",

" calc_grad - function that calculates gradient(x)\n",

" \n",

" Outputs:\n",

" x - iteration history of x (decision variables)\n",

" f - iteration history of f(x) (objective value)\n",

" p - iteration history of p (steps)\n",

" B - Hessian approximation\n",

" '''\n",

" \n",

" # Allocate outputs as empty lists\n",

" x = []\n",

" f = []\n",

" p = []\n",

" grad = []\n",

" B = []\n",

" \n",

" # Store starting point\n",

" x.append(x0)\n",

" k = 0\n",

" \n",

" flag = True\n",

" \n",

" print(\"Iter. \\tf(x) \\t\\t||grad(x)|| \\t||p|| \\t\\tmin(lambda)\")\n",

" \n",

" while flag and k < max_iter:\n",

" # Evaluate f(x) at current iteration\n",

" f.append(calc_f(x[k]))\n",

" \n",

" # Evaluate gradient\n",

" grad.append(calc_grad(x[k]))\n",

" \n",

" if(check_nan(grad[k])):\n",

" print(\"WARNING: gradiant calculation returned NaN\")\n",

" break\n",

" \n",

" if verbose:\n",

" print(\"\\n\")\n",

" print(\"k = \",k)\n",

" print(\"x = \",x[k])\n",

" print(\"grad = \",grad[k])\n",

"\n",

" \n",

" # Update Hessian approximation\n",

" if k == 0:\n",

" # Initialize with identity\n",

" B.append(np.eye(len(x0)))\n",

"\n",

" else:\n",

" # Change in x\n",

" s = x[k] - x[k-1]\n",

"\n",

" # Change in gradient\n",

" y = grad[k] - grad[k-1]\n",

"\n",

" # SR1 formulation\n",

" u = y - B[k-1].dot(s)\n",

" denom = (u).dot(s)\n",

" \n",

" # Formula: dB = u * u.T / (u.T * s) if u is a column vector.\n",

" dB = xxT(u)/denom\n",

" \n",

" if(verbose):\n",

" print(\"s = \",s)\n",

" print(\"y = \",y)\n",

" print(\"SR1 update denominator, (y-B[k-1]*s).T*s = \",denom)\n",

" print(\"SR1 update u = \",u)\n",

" print(\"SR1 update u.T*u/(u.T*s) = \\n\",dB)\n",

" \n",

" B.append(B[k-1] + dB)\n",

"\n",

" if verbose:\n",

" print(\"B = \\n\",B[k])\n",

" \n",

" if(check_nan(B[k])):\n",

" print(\"WARNING: Hessian update returned NaN\")\n",

" break\n",

" \n",

" c = np.linalg.cond(B[k])\n",

" if c > 1E12:\n",

" flag = False\n",

" print(\"Warning: Hessian approximation is near singular.\")\n",

" print(\"B[k] = \\n\",B[k])\n",

" \n",

" else:\n",

" # Calculate step\n",

" p.append(linalg.solve(B[k],-grad[k]))\n",

"\n",

" if verbose:\n",

" print(\"p = \",p[k])\n",

"\n",

" # Take step\n",

" x.append(x[k] + p[k])\n",

"\n",

" # Calculate norms\n",

" norm_p = linalg.norm(p[k])\n",

" norm_g = linalg.norm(grad[k])\n",

"\n",

" # Calculate eigenvalues of Hessian (for display only)\n",

" ev = np.real(linalg.eigvals(B[k]))\n",

"\n",

" # print(\"k = \",k,\"\\t\"f[k],\"\\t\",norm_g,\"\\t\",norm_p)\n",

" print(k,' \\t{0: 1.4e} \\t{1:1.4e} \\t{2:1.4e} \\t{3: 1.4e}'.format(f[k],norm_g,norm_p,np.min(ev)))\n",

"\n",

" # Check convergence criteria\n",

" flag = (norm_p > eps1) and (norm_g > eps2)\n",

"\n",

" # Update iteration counter\n",

" k = k + 1\n",

" \n",

" print(\"Done.\")\n",

" print(\"x* = \",x[-1])\n",

" \n",

" return x,f,p,B\n",

"\n",

"print(\"Done.\")"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.2 Test Case: Simple quadratic program](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2-Test-Case:-Simple-quadratic-program)",

"section": "3.5.2 Test Case: Simple quadratic program"

}

},

"source": [

"## 3.5.2 Test Case: Simple quadratic program\n",

"\n",

"$$\\min_x ~~ x_1^2 + (x_2 -1)^2$$"

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.2 Test Case: Simple quadratic program](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2-Test-Case:-Simple-quadratic-program)",

"section": "3.5.2 Test Case: Simple quadratic program"

}

},

"outputs": [],

"source": [

"def my_f_simple(x):\n",

" return x[0]**2 + (x[1]-1)**2\n",

"\n",

"def my_grad_exact(x):\n",

" return np.array([2*x[0], 2*(x[1] - 1) ])"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.1 Near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.1-Near-solution)",

"section": "3.5.2.1 Near solution"

}

},

"source": [

"### 3.5.2.1 Near solution\n",

"Consider $x_0 = [-0.1, 0.5]^T$"

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.1 Near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.1-Near-solution)",

"section": "3.5.2.1 Near solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t 6.5000e-01 \t1.6125e+00 \t1.6125e+00 \t 1.0000e+00\n",

"1 \t 6.5000e-01 \t1.6125e+00 \t8.0623e-01 \t 1.0000e+00\n",

"2 \t 1.9259e-34 \t2.7756e-17 \t2.7595e-17 \t 1.0000e+00\n",

"Done.\n",

"x* = [1.36642834e-17 1.00000000e+00]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[1.01538462 0.12307692]\n",

" [0.12307692 1.98461538]] \n",

"\n",

"Eigenvalues: [1.+0.j 2.+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[2. 0.]\n",

" [0. 2.]] \n",

"\n",

"Eigenvalues: [2.+0.j 2.+0.j] \n",

"\n"

]

}

],

"source": [

"# Specify convergence criteria\n",

"eps1 = 1E-8\n",

"eps2 = 1E-4\n",

"\n",

"# Create a Lambda (anonymous) function for gradient calculation\n",

"# calc_grad = lambda x : my_grad_approx(x,my_f_simple,1E-6,verbose=False)\n",

"calc_grad = lambda x : my_grad_exact(x)\n",

"\n",

"# Specify starting point\n",

"x0 = np.array([-0.1, 0.2])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_simple,calc_grad,eps1,eps2,verbose=False,max_iter=50);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.2 Far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.2-Far-from-solution)",

"section": "3.5.2.2 Far from solution"

}

},

"source": [

"### 3.5.2.2 Far from solution\n",

"Consider $x_0 = [-100, 500]^T$"

]

},

{

"cell_type": "code",

"execution_count": 6,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.2 Far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.2-Far-from-solution)",

"section": "3.5.2.2 Far from solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t 2.5900e+05 \t1.0178e+03 \t1.0178e+03 \t 1.0000e+00\n",

"1 \t 2.5900e+05 \t1.0178e+03 \t5.0892e+02 \t 1.0000e+00\n",

"2 \t 3.4331e-27 \t1.1719e-13 \t7.2963e-14 \t 1.0000e+00\n",

"Done.\n",

"x* = [2.46138186e-14 1.00000000e+00]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[ 1.03860989 -0.19266335]\n",

" [-0.19266335 1.96139011]] \n",

"\n",

"Eigenvalues: [1.+0.j 2.+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[2. 0.]\n",

" [0. 2.]] \n",

"\n",

"Eigenvalues: [2.+0.j 2.+0.j] \n",

"\n"

]

}

],

"source": [

"# Specify starting point\n",

"x0 = np.array([-100, 500])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_simple,calc_grad,eps1,eps2,verbose=False,max_iter=50);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.3 Activity/Discussion](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.3-Activity/Discussion)",

"section": "3.5.2.3 Activity/Discussion"

}

},

"source": [

"### 3.5.2.3 Activity/Discussion\n",

"* Does the number of iterations depend on the starting point for this problem?\n",

"* How many iterations are needed for Newton's method to converge for a positive definite quadratic program using exact second derivative information?\n",

"* Why does the SR1 update not converge to the true Hessian?"

]

},

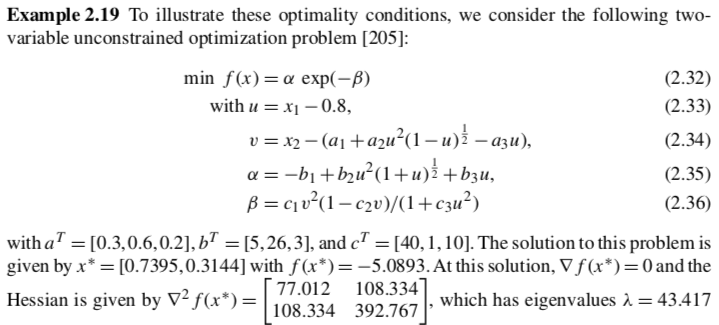

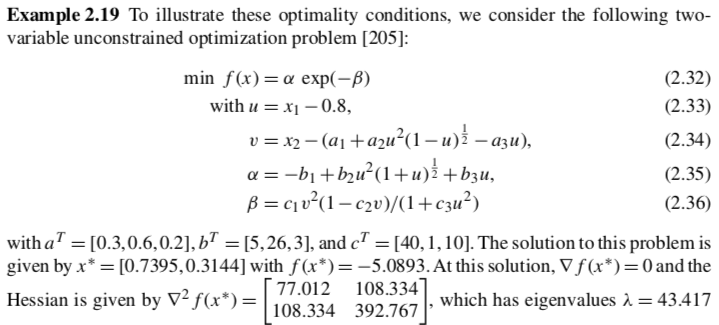

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.3 Test Case: Example 2.19](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3-Test-Case:-Example-2.19)",

"section": "3.5.3 Test Case: Example 2.19"

}

},

"source": [

"## 3.5.3 Test Case: Example 2.19\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.3 Test Case: Example 2.19](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3-Test-Case:-Example-2.19)",

"section": "3.5.3 Test Case: Example 2.19"

}

},

"outputs": [],

"source": [

"## Define Python function to calculate objective\n",

"def my_f_ex_2_19(x,verbose=False):\n",

" ''' Evaluate function given above at point x\n",

"\n",

" Inputs:\n",

" x - vector with 2 elements\n",

" \n",

" Outputs:\n",

" f - function value (scalar)\n",

" '''\n",

" # Constants\n",

" a = np.array([0.3, 0.6, 0.2])\n",

" b = np.array([5, 26, 3])\n",

" c = np.array([40, 1, 10])\n",

" \n",

" # Intermediates. Recall Python indicies start at 0\n",

" u = x[0] - 0.8\n",

" s = np.sqrt(1-u)\n",

" s2 = np.sqrt(1+u)\n",

" v = x[1] -(a[0] + a[1]*u**2*s-a[2]*u)\n",

" alpha = -b[0] + b[1]*u**2*s2+ b[2]*u # September 5, 2018: changed 's' to 's2'\n",

" beta = c[0]*v**2*(1-c[1]*v)/(1+c[2]*u**2)\n",

" f = alpha*np.exp(-beta)\n",

" \n",

" if verbose:\n",

" print(\"##### my_f at x = \",x, \"#####\")\n",

" print(\"u = \",u)\n",

" print(\"sqrt(1-u) = \",s)\n",

" print(\"sqrt(1+u) = \",s2)\n",

" print(\"v = \",v)\n",

" print(\"alpha = \",alpha)\n",

" print(\"beta = \",beta)\n",

" print(\"f(x) = \",f)\n",

" print(\"##### Done. #####\\n\")\n",

" \n",

" return f"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.1 $x_0$ somewhat near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.1-$x_0$-somewhat-near-solution)",

"section": "3.5.3.1 $x_0$ somewhat near solution"

}

},

"source": [

"### 3.5.3.1 $x_0$ somewhat near solution\n",

"\n",

"Consider:\n",

"$$x_0 = [0.3, 0.1]^T$$"

]

},

{

"cell_type": "code",

"execution_count": 8,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.1 $x_0$ somewhat near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.1-$x_0$-somewhat-near-solution)",

"section": "3.5.3.1 $x_0$ somewhat near solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t-3.6022e-02 \t8.3847e-01 \t8.3847e-01 \t 1.0000e+00\n",

"1 \t-4.1276e-02 \t4.7785e-01 \t3.0223e-01 \t 1.0000e+00\n",

"2 \t-2.2301e+00 \t2.1236e+01 \t3.0865e-01 \t-6.8856e+01\n",

"3 \t-4.1260e-02 \t4.4645e-01 \t1.3053e-01 \t-6.7478e+01\n",

"4 \t-1.0354e-01 \t1.1855e+00 \t1.5062e-01 \t-7.1805e+01\n",

"5 \t-3.7115e-02 \t3.9982e-01 \t1.3096e-02 \t-5.8783e+01\n",

"6 \t-3.4039e-02 \t3.6164e-01 \t5.9865e-02 \t-1.4365e+01\n",

"7 \t-2.2398e-02 \t2.0377e-01 \t4.2988e-02 \t-9.5209e+00\n",

"8 \t-1.7133e-02 \t1.1914e-01 \t4.2522e-02 \t-6.4394e+00\n",

"9 \t-1.4292e-02 \t5.7282e-02 \t2.4810e-02 \t-4.8015e+00\n",

"10 \t-1.3542e-02 \t2.5813e-02 \t1.0353e-02 \t-2.8612e+00\n",

"11 \t-1.3373e-02 \t1.0933e-02 \t8.4091e-03 \t-1.6117e+00\n",

"12 \t-1.3325e-02 \t7.7765e-04 \t7.5906e-04 \t-1.6030e+00\n",

"13 \t-1.3324e-02 \t1.7106e-05 \t1.4129e-05 \t-1.5761e+00\n",

"Done.\n",

"x* = [0.80557705 0.96556999]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[-1.48333926 -0.21589307]\n",

" [-0.21589307 -1.07333656]] \n",

"\n",

"Eigenvalues: [-1.57605484+0.j -0.98062097+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[-1.47002939 -0.20602227]\n",

" [-0.20602227 -1.06561938]] \n",

"\n",

"Eigenvalues: [-1.55649728+0.j -0.9791515 +0.j] \n",

"\n"

]

}

],

"source": [

"# Create a Lambda (anonymous) function for gradient calculation\n",

"calc_grad = lambda x : my_grad_approx(x,my_f_ex_2_19,1E-6,verbose=False)\n",

"\n",

"# Specify starting point\n",

"x0 = np.array([0.3, 0.1])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_ex_2_19,calc_grad,eps1,eps2,verbose=False,max_iter=250);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.2 $x_0$ far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.2-$x_0$-far-from-solution)",

"section": "3.5.3.2 $x_0$ far from solution"

}

},

"source": [

"### 3.5.3.2 $x_0$ far from solution\n",

"\n",

"Consider:\n",

"$$x_0 = [-0.1, 0.2]^T$$"

]

},

{

"cell_type": "code",

"execution_count": 9,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.2 $x_0$ far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.2-$x_0$-far-from-solution)",

"section": "3.5.3.2 $x_0$ far from solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t-4.5540e-04 \t9.2580e-03 \t9.2580e-03 \t 1.0000e+00\n",

"1 \t-5.4879e-04 \t1.0959e-02 \t5.9578e-02 \t-1.8395e-01\n",

"2 \t-1.5604e-04 \t3.4877e-03 \t2.7627e-02 \t-1.2568e-01\n",

"3 \t-8.3235e-05 \t1.9563e-03 \t3.5000e-02 \t-5.5390e-02\n",

"4 \t-3.6006e-05 \t9.0023e-04 \t2.9559e-02 \t-3.0011e-02\n",

"5 \t-1.7097e-05 \t4.4964e-04 \t2.9246e-02 \t-1.5078e-02\n",

"6 \t-7.9092e-06 \t2.1835e-04 \t2.7389e-02 \t-7.7836e-03\n",

"7 \t-3.7242e-06 \t1.0745e-04 \t2.6349e-02 \t-3.9664e-03\n",

"8 \t-1.7531e-06 \t5.2702e-05 \t2.5208e-02 \t-2.0268e-03\n",

"Done.\n",

"x* = [-0.11328951 -0.05033867]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[ 1.00141633e+00 -4.82365647e-02]\n",

" [-4.82365647e-02 2.91945446e-04]] \n",

"\n",

"Eigenvalues: [ 1.00373512+0.j -0.00202684+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[ 6.99115848e-05 -2.18301460e-04]\n",

" [-2.18301460e-04 -7.02712139e-04]] \n",

"\n",

"Eigenvalues: [ 0.00012733+0.j -0.00076013+0.j] \n",

"\n"

]

}

],

"source": [

"# Specify starting point\n",

"x0 = np.array([-0.1, 0.2])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_ex_2_19,calc_grad,eps1,eps2,verbose=False,max_iter=250);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.3 Activity/Discussion](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.3-Activity/Discussion)",

"section": "3.5.3.3 Activity/Discussion"

}

},

"source": [

"### 3.5.3.3 Activity/Discussion\n",

"* Classify each candidate solution.\n",

"* Is the SR1 approximation always positive definite?"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.4 Broyden update with Cholesky factorization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.4-Broyden-update-with-Cholesky-factorization)",

"section": "3.5.4 Broyden update with Cholesky factorization"

}

},

"source": [

"## 3.5.4 Broyden update with Cholesky factorization\n",

"As part of Algorithms Homework 3, you will adapt this example and implement the BFGS (Broyden-Fletcher-Goldfarb-Shanno) update formula. \n",

"\n",

"\n",

"You may decide to use the Cholesky factorization of $B^{k}$,\n",

"\n",

"$$B^{k} = L^{k} (L^{k})^T,$$\n",

"\n",

"to make your BFGS update more efficient. (This is not required). Let's see how to do Cholseky factorization with SciPy."

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.4 Broyden update with Cholesky factorization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.4-Broyden-update-with-Cholesky-factorization)",

"section": "3.5.4 Broyden update with Cholesky factorization"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"B = \n",

" [[ 1.00098448 0.00242031 -0.01329946]\n",

" [ 0.00242031 1.00595022 -0.03269612]\n",

" [-0.01329946 -0.03269612 1.17966326]] \n",

"\n",

"L = \n",

" [[ 1.00049212 0. 0. ]\n",

" [ 0.00241912 1.00296778 0. ]\n",

" [-0.01329292 -0.03256731 1.08555328]] \n",

"\n",

"L*L.T = \n",

" [[ 1.00098448 0.00242031 -0.01329946]\n",

" [ 0.00242031 1.00595022 -0.03269612]\n",

" [-0.01329946 -0.03269612 1.17966326]] \n",

"\n"

]

}

],

"source": [

"## Create random P.D. and symmetric matrix\n",

"B_ = np.eye(3) + xxT(np.random.normal(0,1,3))\n",

"print(\"B = \\n\",B_,\"\\n\")\n",

"\n",

"## Perform Cholesky factorization. \n",

"# By default, lower=False and L is upper triangular. Either works here,\n",

"# but we prefer L to be lower triangular for convention.\n",

"L = linalg.cholesky(B_,lower=True)\n",

"print(\"L = \\n\",L,\"\\n\")\n",

"\n",

"## Reconstruct B\n",

"print(\"L*L.T = \\n\",L.dot(L.T),\"\\n\")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.4 Broyden update with Cholesky factorization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.4-Broyden-update-with-Cholesky-factorization)",

"section": "3.5.4 Broyden update with Cholesky factorization"

}

},

"outputs": [],

"source": []

},

{

"cell_type": "markdown",

"id": "264f8875",

"metadata": {},

"source": [

"\n",

"< [3.4 Newton-type Methods for Unconstrained Optimization](https://ndcbe.github.io/CBE60499/03.04-Netwon-Methods.html) | [Contents](toc.html) | [Tag Index](tag_index.html) | [3.6 Descent and Globalization](https://ndcbe.github.io/CBE60499/03.06-Globalization.html) >

"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 1,

"link": "[3.5 Quasi-Newton Methods for Unconstrained Optimization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5-Quasi-Newton-Methods-for-Unconstrained-Optimization)",

"section": "3.5 Quasi-Newton Methods for Unconstrained Optimization"

}

},

"source": [

"# 3.5 Quasi-Newton Methods for Unconstrained Optimization\n",

"\n",

"**Reference**: Sections 3.1 - 3.3 in Biegler (2010)"

]

},

{

"cell_type": "code",

"execution_count": 1,

"metadata": {

"nbpages": {

"level": 1,

"link": "[3.5 Quasi-Newton Methods for Unconstrained Optimization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5-Quasi-Newton-Methods-for-Unconstrained-Optimization)",

"section": "3.5 Quasi-Newton Methods for Unconstrained Optimization"

}

},

"outputs": [],

"source": [

"# Load required Python libraries.\n",

"import matplotlib.pyplot as plt\n",

"import numpy as np\n",

"from scipy import linalg"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.1 Unconstrained Optimization with Approximate Hessian](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1-Unconstrained-Optimization-with-Approximate-Hessian)",

"section": "3.5.1 Unconstrained Optimization with Approximate Hessian"

}

},

"source": [

"## 3.5.1 Unconstrained Optimization with Approximate Hessian"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.1 Unconstrained Optimization with Approximate Hessian](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1-Unconstrained-Optimization-with-Approximate-Hessian)",

"section": "3.5.1 Unconstrained Optimization with Approximate Hessian"

}

},

"source": [

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.1 Unconstrained Optimization with Approximate Hessian](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1-Unconstrained-Optimization-with-Approximate-Hessian)",

"section": "3.5.1 Unconstrained Optimization with Approximate Hessian"

}

},

"source": [

"SR1 update is one way to approximate $B^k$."

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.1 Library of helper functions](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.1-Library-of-helper-functions)",

"section": "3.5.1.1 Library of helper functions"

}

},

"source": [

"### 3.5.1.1 Library of helper functions"

]

},

{

"cell_type": "code",

"execution_count": 2,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.1 Library of helper functions](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.1-Library-of-helper-functions)",

"section": "3.5.1.1 Library of helper functions"

}

},

"outputs": [],

"source": [

"## Check is element of array is NaN\n",

"def check_nan(A):\n",

" return np.sum(np.isnan(A))\n",

"\n",

"## Calculate gradient with central finite difference\n",

"def my_grad_approx(x,f,eps1,verbose=False):\n",

" '''\n",

" Calculate gradient of function my_f using central difference formula\n",

" \n",

" Inputs:\n",

" x - point for which to evaluate gradient\n",

" f - function to consider\n",

" eps1 - perturbation size\n",

" \n",

" Outputs:\n",

" grad - gradient (vector)\n",

" '''\n",

" \n",

" n = len(x)\n",

" grad = np.zeros(n)\n",

" \n",

" if(verbose):\n",

" print(\"***** my_grad_approx at x = \",x,\"*****\")\n",

" \n",

" for i in range(0,n):\n",

" \n",

" # Create vector of zeros except eps in position i\n",

" e = np.zeros(n)\n",

" e[i] = eps1\n",

" \n",

" # Finite difference formula\n",

" my_f_plus = f(x + e)\n",

" my_f_minus = f(x - e)\n",

" \n",

" # Diagnostics\n",

" if(verbose):\n",

" print(\"e[\",i,\"] = \",e)\n",

" print(\"f(x + e[\",i,\"]) = \",my_f_plus)\n",

" print(\"f(x - e[\",i,\"]) = \",my_f_minus)\n",

" \n",

" \n",

" grad[i] = (my_f_plus - my_f_minus)/(2*eps1)\n",

" \n",

" if(verbose):\n",

" print(\"***** Done. ***** \\n\")\n",

" \n",

" return grad\n",

"\n",

"## Calculate Hessian using cental finite difference\n",

"def my_hes_approx(x,grad,eps2):\n",

" '''\n",

" Calculate gradient of function my_f using central difference formula and my_grad\n",

" \n",

" Inputs:\n",

" x - point for which to evaluate gradient\n",

" grad - function to calculate the gradient\n",

" eps2 - perturbation size (for Hessian NOT gradient approximation)\n",

" \n",

" Outputs:\n",

" H - Hessian (matrix)\n",

" '''\n",

" \n",

" n = len(x)\n",

" H = np.zeros([n,n])\n",

" \n",

" for i in range(0,n):\n",

" # Create vector of zeros except eps in position i\n",

" e = np.zeros(n)\n",

" e[i] = eps2\n",

" \n",

" # Evaluate gradient twice\n",

" grad_plus = grad(x + e)\n",

" grad_minus = grad(x - e)\n",

" \n",

" # Notice we are building the Hessian by column (or row)\n",

" H[:,i] = (grad_plus - grad_minus)/(2*eps2)\n",

"\n",

" return H\n",

"\n",

"## Linear algebra calculation\n",

"def xxT(u):\n",

" '''\n",

" Calculates u*u.T to circumvent limitation with SciPy\n",

" \n",

" Arguments:\n",

" u - numpy 1D array\n",

" \n",

" Returns:\n",

" u*u.T\n",

" \n",

" Assume u is a nx1 vector.\n",

" Recall: NumPy does not distinguish between row or column vectors\n",

" \n",

" u.dot(u) returns a scalar. This functon returns an nxn matrix.\n",

" '''\n",

" \n",

" n = len(u)\n",

" A = np.zeros([n,n])\n",

" for i in range(0,n):\n",

" for j in range(0,n):\n",

" A[i,j] = u[i]*u[j]\n",

" \n",

" return A\n",

"\n",

"## Analyze Hessian\n",

"def analyze_hes(B):\n",

" print(B,\"\\n\")\n",

" \n",

" l = linalg.eigvals(B)\n",

" print(\"Eigenvalues: \",l,\"\\n\")\n"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.2 Symmetric Rank 1 (SR1) Update](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.2-Symmetric-Rank-1-(SR1)-Update)",

"section": "3.5.1.2 Symmetric Rank 1 (SR1) Update"

}

},

"source": [

"### 3.5.1.2 Symmetric Rank 1 (SR1) Update"

]

},

{

"cell_type": "code",

"execution_count": 3,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.1.2 Symmetric Rank 1 (SR1) Update](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.1.2-Symmetric-Rank-1-(SR1)-Update)",

"section": "3.5.1.2 Symmetric Rank 1 (SR1) Update"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Done.\n"

]

}

],

"source": [

"def alg1_sr1(x0,calc_f,calc_grad,eps1,eps2,verbose=False,max_iter=1000):\n",

" '''\n",

" Arguments:\n",

" x0 - starting point\n",

" calc_f - funcation that calculates f(x)\n",

" calc_grad - function that calculates gradient(x)\n",

" \n",

" Outputs:\n",

" x - iteration history of x (decision variables)\n",

" f - iteration history of f(x) (objective value)\n",

" p - iteration history of p (steps)\n",

" B - Hessian approximation\n",

" '''\n",

" \n",

" # Allocate outputs as empty lists\n",

" x = []\n",

" f = []\n",

" p = []\n",

" grad = []\n",

" B = []\n",

" \n",

" # Store starting point\n",

" x.append(x0)\n",

" k = 0\n",

" \n",

" flag = True\n",

" \n",

" print(\"Iter. \\tf(x) \\t\\t||grad(x)|| \\t||p|| \\t\\tmin(lambda)\")\n",

" \n",

" while flag and k < max_iter:\n",

" # Evaluate f(x) at current iteration\n",

" f.append(calc_f(x[k]))\n",

" \n",

" # Evaluate gradient\n",

" grad.append(calc_grad(x[k]))\n",

" \n",

" if(check_nan(grad[k])):\n",

" print(\"WARNING: gradiant calculation returned NaN\")\n",

" break\n",

" \n",

" if verbose:\n",

" print(\"\\n\")\n",

" print(\"k = \",k)\n",

" print(\"x = \",x[k])\n",

" print(\"grad = \",grad[k])\n",

"\n",

" \n",

" # Update Hessian approximation\n",

" if k == 0:\n",

" # Initialize with identity\n",

" B.append(np.eye(len(x0)))\n",

"\n",

" else:\n",

" # Change in x\n",

" s = x[k] - x[k-1]\n",

"\n",

" # Change in gradient\n",

" y = grad[k] - grad[k-1]\n",

"\n",

" # SR1 formulation\n",

" u = y - B[k-1].dot(s)\n",

" denom = (u).dot(s)\n",

" \n",

" # Formula: dB = u * u.T / (u.T * s) if u is a column vector.\n",

" dB = xxT(u)/denom\n",

" \n",

" if(verbose):\n",

" print(\"s = \",s)\n",

" print(\"y = \",y)\n",

" print(\"SR1 update denominator, (y-B[k-1]*s).T*s = \",denom)\n",

" print(\"SR1 update u = \",u)\n",

" print(\"SR1 update u.T*u/(u.T*s) = \\n\",dB)\n",

" \n",

" B.append(B[k-1] + dB)\n",

"\n",

" if verbose:\n",

" print(\"B = \\n\",B[k])\n",

" \n",

" if(check_nan(B[k])):\n",

" print(\"WARNING: Hessian update returned NaN\")\n",

" break\n",

" \n",

" c = np.linalg.cond(B[k])\n",

" if c > 1E12:\n",

" flag = False\n",

" print(\"Warning: Hessian approximation is near singular.\")\n",

" print(\"B[k] = \\n\",B[k])\n",

" \n",

" else:\n",

" # Calculate step\n",

" p.append(linalg.solve(B[k],-grad[k]))\n",

"\n",

" if verbose:\n",

" print(\"p = \",p[k])\n",

"\n",

" # Take step\n",

" x.append(x[k] + p[k])\n",

"\n",

" # Calculate norms\n",

" norm_p = linalg.norm(p[k])\n",

" norm_g = linalg.norm(grad[k])\n",

"\n",

" # Calculate eigenvalues of Hessian (for display only)\n",

" ev = np.real(linalg.eigvals(B[k]))\n",

"\n",

" # print(\"k = \",k,\"\\t\"f[k],\"\\t\",norm_g,\"\\t\",norm_p)\n",

" print(k,' \\t{0: 1.4e} \\t{1:1.4e} \\t{2:1.4e} \\t{3: 1.4e}'.format(f[k],norm_g,norm_p,np.min(ev)))\n",

"\n",

" # Check convergence criteria\n",

" flag = (norm_p > eps1) and (norm_g > eps2)\n",

"\n",

" # Update iteration counter\n",

" k = k + 1\n",

" \n",

" print(\"Done.\")\n",

" print(\"x* = \",x[-1])\n",

" \n",

" return x,f,p,B\n",

"\n",

"print(\"Done.\")"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.2 Test Case: Simple quadratic program](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2-Test-Case:-Simple-quadratic-program)",

"section": "3.5.2 Test Case: Simple quadratic program"

}

},

"source": [

"## 3.5.2 Test Case: Simple quadratic program\n",

"\n",

"$$\\min_x ~~ x_1^2 + (x_2 -1)^2$$"

]

},

{

"cell_type": "code",

"execution_count": 4,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.2 Test Case: Simple quadratic program](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2-Test-Case:-Simple-quadratic-program)",

"section": "3.5.2 Test Case: Simple quadratic program"

}

},

"outputs": [],

"source": [

"def my_f_simple(x):\n",

" return x[0]**2 + (x[1]-1)**2\n",

"\n",

"def my_grad_exact(x):\n",

" return np.array([2*x[0], 2*(x[1] - 1) ])"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.1 Near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.1-Near-solution)",

"section": "3.5.2.1 Near solution"

}

},

"source": [

"### 3.5.2.1 Near solution\n",

"Consider $x_0 = [-0.1, 0.5]^T$"

]

},

{

"cell_type": "code",

"execution_count": 5,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.1 Near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.1-Near-solution)",

"section": "3.5.2.1 Near solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t 6.5000e-01 \t1.6125e+00 \t1.6125e+00 \t 1.0000e+00\n",

"1 \t 6.5000e-01 \t1.6125e+00 \t8.0623e-01 \t 1.0000e+00\n",

"2 \t 1.9259e-34 \t2.7756e-17 \t2.7595e-17 \t 1.0000e+00\n",

"Done.\n",

"x* = [1.36642834e-17 1.00000000e+00]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[1.01538462 0.12307692]\n",

" [0.12307692 1.98461538]] \n",

"\n",

"Eigenvalues: [1.+0.j 2.+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[2. 0.]\n",

" [0. 2.]] \n",

"\n",

"Eigenvalues: [2.+0.j 2.+0.j] \n",

"\n"

]

}

],

"source": [

"# Specify convergence criteria\n",

"eps1 = 1E-8\n",

"eps2 = 1E-4\n",

"\n",

"# Create a Lambda (anonymous) function for gradient calculation\n",

"# calc_grad = lambda x : my_grad_approx(x,my_f_simple,1E-6,verbose=False)\n",

"calc_grad = lambda x : my_grad_exact(x)\n",

"\n",

"# Specify starting point\n",

"x0 = np.array([-0.1, 0.2])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_simple,calc_grad,eps1,eps2,verbose=False,max_iter=50);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.2 Far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.2-Far-from-solution)",

"section": "3.5.2.2 Far from solution"

}

},

"source": [

"### 3.5.2.2 Far from solution\n",

"Consider $x_0 = [-100, 500]^T$"

]

},

{

"cell_type": "code",

"execution_count": 6,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.2 Far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.2-Far-from-solution)",

"section": "3.5.2.2 Far from solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t 2.5900e+05 \t1.0178e+03 \t1.0178e+03 \t 1.0000e+00\n",

"1 \t 2.5900e+05 \t1.0178e+03 \t5.0892e+02 \t 1.0000e+00\n",

"2 \t 3.4331e-27 \t1.1719e-13 \t7.2963e-14 \t 1.0000e+00\n",

"Done.\n",

"x* = [2.46138186e-14 1.00000000e+00]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[ 1.03860989 -0.19266335]\n",

" [-0.19266335 1.96139011]] \n",

"\n",

"Eigenvalues: [1.+0.j 2.+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[2. 0.]\n",

" [0. 2.]] \n",

"\n",

"Eigenvalues: [2.+0.j 2.+0.j] \n",

"\n"

]

}

],

"source": [

"# Specify starting point\n",

"x0 = np.array([-100, 500])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_simple,calc_grad,eps1,eps2,verbose=False,max_iter=50);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.2.3 Activity/Discussion](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.2.3-Activity/Discussion)",

"section": "3.5.2.3 Activity/Discussion"

}

},

"source": [

"### 3.5.2.3 Activity/Discussion\n",

"* Does the number of iterations depend on the starting point for this problem?\n",

"* How many iterations are needed for Newton's method to converge for a positive definite quadratic program using exact second derivative information?\n",

"* Why does the SR1 update not converge to the true Hessian?"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.3 Test Case: Example 2.19](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3-Test-Case:-Example-2.19)",

"section": "3.5.3 Test Case: Example 2.19"

}

},

"source": [

"## 3.5.3 Test Case: Example 2.19\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": 7,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.3 Test Case: Example 2.19](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3-Test-Case:-Example-2.19)",

"section": "3.5.3 Test Case: Example 2.19"

}

},

"outputs": [],

"source": [

"## Define Python function to calculate objective\n",

"def my_f_ex_2_19(x,verbose=False):\n",

" ''' Evaluate function given above at point x\n",

"\n",

" Inputs:\n",

" x - vector with 2 elements\n",

" \n",

" Outputs:\n",

" f - function value (scalar)\n",

" '''\n",

" # Constants\n",

" a = np.array([0.3, 0.6, 0.2])\n",

" b = np.array([5, 26, 3])\n",

" c = np.array([40, 1, 10])\n",

" \n",

" # Intermediates. Recall Python indicies start at 0\n",

" u = x[0] - 0.8\n",

" s = np.sqrt(1-u)\n",

" s2 = np.sqrt(1+u)\n",

" v = x[1] -(a[0] + a[1]*u**2*s-a[2]*u)\n",

" alpha = -b[0] + b[1]*u**2*s2+ b[2]*u # September 5, 2018: changed 's' to 's2'\n",

" beta = c[0]*v**2*(1-c[1]*v)/(1+c[2]*u**2)\n",

" f = alpha*np.exp(-beta)\n",

" \n",

" if verbose:\n",

" print(\"##### my_f at x = \",x, \"#####\")\n",

" print(\"u = \",u)\n",

" print(\"sqrt(1-u) = \",s)\n",

" print(\"sqrt(1+u) = \",s2)\n",

" print(\"v = \",v)\n",

" print(\"alpha = \",alpha)\n",

" print(\"beta = \",beta)\n",

" print(\"f(x) = \",f)\n",

" print(\"##### Done. #####\\n\")\n",

" \n",

" return f"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.1 $x_0$ somewhat near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.1-$x_0$-somewhat-near-solution)",

"section": "3.5.3.1 $x_0$ somewhat near solution"

}

},

"source": [

"### 3.5.3.1 $x_0$ somewhat near solution\n",

"\n",

"Consider:\n",

"$$x_0 = [0.3, 0.1]^T$$"

]

},

{

"cell_type": "code",

"execution_count": 8,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.1 $x_0$ somewhat near solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.1-$x_0$-somewhat-near-solution)",

"section": "3.5.3.1 $x_0$ somewhat near solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t-3.6022e-02 \t8.3847e-01 \t8.3847e-01 \t 1.0000e+00\n",

"1 \t-4.1276e-02 \t4.7785e-01 \t3.0223e-01 \t 1.0000e+00\n",

"2 \t-2.2301e+00 \t2.1236e+01 \t3.0865e-01 \t-6.8856e+01\n",

"3 \t-4.1260e-02 \t4.4645e-01 \t1.3053e-01 \t-6.7478e+01\n",

"4 \t-1.0354e-01 \t1.1855e+00 \t1.5062e-01 \t-7.1805e+01\n",

"5 \t-3.7115e-02 \t3.9982e-01 \t1.3096e-02 \t-5.8783e+01\n",

"6 \t-3.4039e-02 \t3.6164e-01 \t5.9865e-02 \t-1.4365e+01\n",

"7 \t-2.2398e-02 \t2.0377e-01 \t4.2988e-02 \t-9.5209e+00\n",

"8 \t-1.7133e-02 \t1.1914e-01 \t4.2522e-02 \t-6.4394e+00\n",

"9 \t-1.4292e-02 \t5.7282e-02 \t2.4810e-02 \t-4.8015e+00\n",

"10 \t-1.3542e-02 \t2.5813e-02 \t1.0353e-02 \t-2.8612e+00\n",

"11 \t-1.3373e-02 \t1.0933e-02 \t8.4091e-03 \t-1.6117e+00\n",

"12 \t-1.3325e-02 \t7.7765e-04 \t7.5906e-04 \t-1.6030e+00\n",

"13 \t-1.3324e-02 \t1.7106e-05 \t1.4129e-05 \t-1.5761e+00\n",

"Done.\n",

"x* = [0.80557705 0.96556999]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[-1.48333926 -0.21589307]\n",

" [-0.21589307 -1.07333656]] \n",

"\n",

"Eigenvalues: [-1.57605484+0.j -0.98062097+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[-1.47002939 -0.20602227]\n",

" [-0.20602227 -1.06561938]] \n",

"\n",

"Eigenvalues: [-1.55649728+0.j -0.9791515 +0.j] \n",

"\n"

]

}

],

"source": [

"# Create a Lambda (anonymous) function for gradient calculation\n",

"calc_grad = lambda x : my_grad_approx(x,my_f_ex_2_19,1E-6,verbose=False)\n",

"\n",

"# Specify starting point\n",

"x0 = np.array([0.3, 0.1])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_ex_2_19,calc_grad,eps1,eps2,verbose=False,max_iter=250);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.2 $x_0$ far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.2-$x_0$-far-from-solution)",

"section": "3.5.3.2 $x_0$ far from solution"

}

},

"source": [

"### 3.5.3.2 $x_0$ far from solution\n",

"\n",

"Consider:\n",

"$$x_0 = [-0.1, 0.2]^T$$"

]

},

{

"cell_type": "code",

"execution_count": 9,

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.2 $x_0$ far from solution](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.2-$x_0$-far-from-solution)",

"section": "3.5.3.2 $x_0$ far from solution"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Iter. \tf(x) \t\t||grad(x)|| \t||p|| \t\tmin(lambda)\n",

"0 \t-4.5540e-04 \t9.2580e-03 \t9.2580e-03 \t 1.0000e+00\n",

"1 \t-5.4879e-04 \t1.0959e-02 \t5.9578e-02 \t-1.8395e-01\n",

"2 \t-1.5604e-04 \t3.4877e-03 \t2.7627e-02 \t-1.2568e-01\n",

"3 \t-8.3235e-05 \t1.9563e-03 \t3.5000e-02 \t-5.5390e-02\n",

"4 \t-3.6006e-05 \t9.0023e-04 \t2.9559e-02 \t-3.0011e-02\n",

"5 \t-1.7097e-05 \t4.4964e-04 \t2.9246e-02 \t-1.5078e-02\n",

"6 \t-7.9092e-06 \t2.1835e-04 \t2.7389e-02 \t-7.7836e-03\n",

"7 \t-3.7242e-06 \t1.0745e-04 \t2.6349e-02 \t-3.9664e-03\n",

"8 \t-1.7531e-06 \t5.2702e-05 \t2.5208e-02 \t-2.0268e-03\n",

"Done.\n",

"x* = [-0.11328951 -0.05033867]\n",

"\n",

"SR1 Hessian approximation. B[k] =\n",

"[[ 1.00141633e+00 -4.82365647e-02]\n",

" [-4.82365647e-02 2.91945446e-04]] \n",

"\n",

"Eigenvalues: [ 1.00373512+0.j -0.00202684+0.j] \n",

"\n",

"True Hessian at x*. B =\n",

"[[ 6.99115848e-05 -2.18301460e-04]\n",

" [-2.18301460e-04 -7.02712139e-04]] \n",

"\n",

"Eigenvalues: [ 0.00012733+0.j -0.00076013+0.j] \n",

"\n"

]

}

],

"source": [

"# Specify starting point\n",

"x0 = np.array([-0.1, 0.2])\n",

"\n",

"# Call optimization routine\n",

"x,f,p,B = alg1_sr1(x0,my_f_ex_2_19,calc_grad,eps1,eps2,verbose=False,max_iter=250);\n",

"\n",

"# SR1 Hessian approximation\n",

"print(\"\\nSR1 Hessian approximation. B[k] =\")\n",

"analyze_hes(B[-1])\n",

"\n",

"# Actual Hessian\n",

"print(\"True Hessian at x*. B =\")\n",

"analyze_hes(my_hes_approx(x[-1],calc_grad,1E-6))"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 3,

"link": "[3.5.3.3 Activity/Discussion](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.3.3-Activity/Discussion)",

"section": "3.5.3.3 Activity/Discussion"

}

},

"source": [

"### 3.5.3.3 Activity/Discussion\n",

"* Classify each candidate solution.\n",

"* Is the SR1 approximation always positive definite?"

]

},

{

"cell_type": "markdown",

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.4 Broyden update with Cholesky factorization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.4-Broyden-update-with-Cholesky-factorization)",

"section": "3.5.4 Broyden update with Cholesky factorization"

}

},

"source": [

"## 3.5.4 Broyden update with Cholesky factorization\n",

"As part of Algorithms Homework 3, you will adapt this example and implement the BFGS (Broyden-Fletcher-Goldfarb-Shanno) update formula. \n",

"\n",

"\n",

"You may decide to use the Cholesky factorization of $B^{k}$,\n",

"\n",

"$$B^{k} = L^{k} (L^{k})^T,$$\n",

"\n",

"to make your BFGS update more efficient. (This is not required). Let's see how to do Cholseky factorization with SciPy."

]

},

{

"cell_type": "code",

"execution_count": 10,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.4 Broyden update with Cholesky factorization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.4-Broyden-update-with-Cholesky-factorization)",

"section": "3.5.4 Broyden update with Cholesky factorization"

}

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"B = \n",

" [[ 1.00098448 0.00242031 -0.01329946]\n",

" [ 0.00242031 1.00595022 -0.03269612]\n",

" [-0.01329946 -0.03269612 1.17966326]] \n",

"\n",

"L = \n",

" [[ 1.00049212 0. 0. ]\n",

" [ 0.00241912 1.00296778 0. ]\n",

" [-0.01329292 -0.03256731 1.08555328]] \n",

"\n",

"L*L.T = \n",

" [[ 1.00098448 0.00242031 -0.01329946]\n",

" [ 0.00242031 1.00595022 -0.03269612]\n",

" [-0.01329946 -0.03269612 1.17966326]] \n",

"\n"

]

}

],

"source": [

"## Create random P.D. and symmetric matrix\n",

"B_ = np.eye(3) + xxT(np.random.normal(0,1,3))\n",

"print(\"B = \\n\",B_,\"\\n\")\n",

"\n",

"## Perform Cholesky factorization. \n",

"# By default, lower=False and L is upper triangular. Either works here,\n",

"# but we prefer L to be lower triangular for convention.\n",

"L = linalg.cholesky(B_,lower=True)\n",

"print(\"L = \\n\",L,\"\\n\")\n",

"\n",

"## Reconstruct B\n",

"print(\"L*L.T = \\n\",L.dot(L.T),\"\\n\")"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"nbpages": {

"level": 2,

"link": "[3.5.4 Broyden update with Cholesky factorization](https://ndcbe.github.io/CBE60499/03.05-Quasi-Newton-Method.html#3.5.4-Broyden-update-with-Cholesky-factorization)",

"section": "3.5.4 Broyden update with Cholesky factorization"

}

},

"outputs": [],

"source": []

},

{

"cell_type": "markdown",

"id": "264f8875",

"metadata": {},

"source": [

"\n",

"< [3.4 Newton-type Methods for Unconstrained Optimization](https://ndcbe.github.io/CBE60499/03.04-Netwon-Methods.html) | [Contents](toc.html) | [Tag Index](tag_index.html) | [3.6 Descent and Globalization](https://ndcbe.github.io/CBE60499/03.06-Globalization.html) >

"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.6"

}

},

"nbformat": 4,

"nbformat_minor": 2

}

"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.6"

}

},

"nbformat": 4,

"nbformat_minor": 2

}